Getting Started with Talend Component Kit

Talend components are functional objects that let you easily perform operations on given sets of data through a comprehensive graphical environment.

Talend Component Kit is a framework designed to help you develop new Talend components. From generating a Java project and writing the code to testing the components and using them in Talend applications, this toolkit helps ensure that your components fit your needs.

If you are new to Talend component development or to the Talend Component Kit framework, you can start by reading the following articles to get a better overview of the framework design.

You can also learn the basics of component development by following this tutorial:

To learn more about Talend components in general and about existing components that you can already use, refer to the following documents:

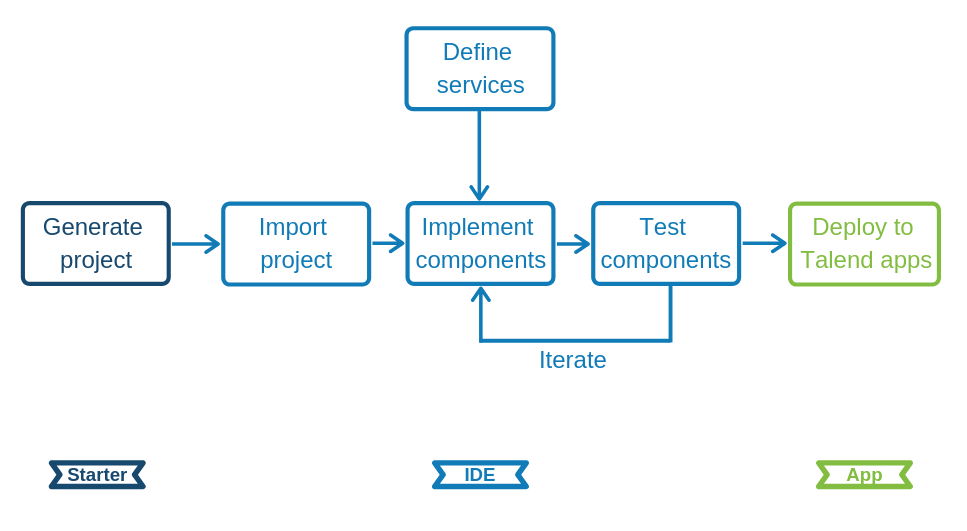

Talend Component Kit methodology

Talend Component Kit is a framework designed to simplify the development of components at two levels:

- Runtime: Runtime is about injecting the specific component code into a job or pipeline. The framework helps unify as much as possible the code required to run in Data Integration (DI) and Beam environments.
- Graphical interface: The framework helps unify the code required to render the component in a browser (web) or in the Eclipse-based Studio (SWT).

Before being able to develop new components, check the prerequisites to make sure that you have all you need to get started.

Developing new components using the framework includes:

- Creating a project using the starter or the Talend IntelliJ plugin. This step builds the skeleton of the project. It consists of:
  - Defining the general configuration model for each component in your project
  - Generating and downloading the project archive from the starter
  - Compiling the project
- Importing the compiled project in your IDE. This step is not required if you have generated the project using the IntelliJ plugin.
- Implementing the components, including:
  - Registering the component by specifying its metadata: family, categories, version, icon, type, and name.
  - Defining the layout and configurable part of the components
  - Defining the partition mapper for Input components
  - Implementing the source logic (producer) for Input components
  - Defining the processor for Output components
- Deploying the components to Talend Studio or Cloud applications

Some additional configuration steps can be necessary according to your requirements:

- Defining services that can be reused in several components

General component execution logic

Each type of component has its own execution logic. The same basic logic is applied to all components of the same type, and is then extended to implement the specificities of each component. The project generated from the starter already contains the basic logic for each component.

The Talend Component Kit framework relies on several primitive components.

All components can use @PostConstruct and @PreDestroy annotations to initialize or release some underlying resource at the beginning and at the end of the processing.

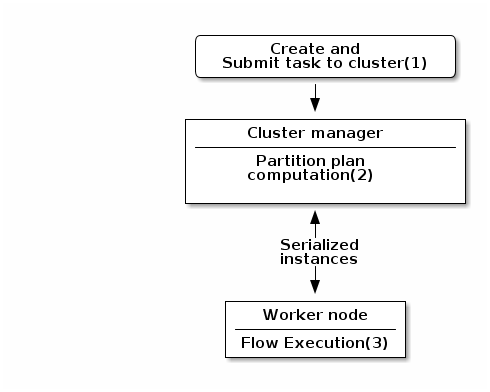

In distributed environments, class constructors are called on cluster manager nodes, while methods annotated with @PostConstruct and @PreDestroy are called on worker nodes. Thus, partition plan computation and pipeline tasks are performed on different nodes.

The created task is a JAR file containing class information, which describes the pipeline (flow) that should be processed in the cluster.

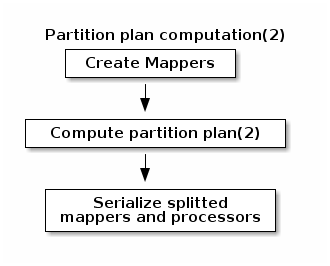

During the partition plan computation step, the pipeline is analyzed and split into stages. The cluster manager node instantiates mappers/processors, gets the estimated data size from the mappers, and splits the created mappers according to that estimated size.

All instances are then serialized and sent to the worker nodes.
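The following plain-Java sketch (no Talend dependency) illustrates these two steps under stated assumptions: a hypothetical RangeMapper covers a range of record indexes, is split into sub-mappers of a desired size on the manager side, and is then serialized and deserialized as if it were shipped to a worker. Its connection field is transient, so it arrives empty and is only filled by init(), a stand-in for a @PostConstruct method. RangeMapper, split(), init() and shipToWorker() are illustrative names, not framework API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Hypothetical mapper reading the records [start, end). Not a Talend API.
class RangeMapper implements Serializable {
    private static final long serialVersionUID = 1L;

    final long start;
    final long end;
    // Stand-in for a non-serializable resource such as a connection:
    // transient fields are skipped by Java serialization.
    transient String connection;

    RangeMapper(long start, long end) {
        this.start = start;
        this.end = end;
    }

    long estimateSize() {
        return end - start;
    }

    // Splits this mapper into sub-mappers of at most desiredSize records each.
    List<RangeMapper> split(long desiredSize) {
        if (desiredSize <= 0) {
            throw new IllegalArgumentException("desiredSize must be positive");
        }
        List<RangeMapper> parts = new ArrayList<>();
        for (long from = start; from < end; from += desiredSize) {
            parts.add(new RangeMapper(from, Math.min(from + desiredSize, end)));
        }
        return parts;
    }

    // Stand-in for a method annotated with @PostConstruct, run on the worker.
    void init() {
        connection = "open:" + start + "-" + end;
    }
}

class PartitionPlanDemo {
    // Serializes then deserializes a mapper, as when it is sent to a worker node.
    static RangeMapper shipToWorker(RangeMapper mapper) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(mapper);
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (RangeMapper) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        RangeMapper root = new RangeMapper(0, 250);            // created on the cluster manager
        for (RangeMapper part : root.split(100)) {             // [0,100) [100,200) [200,250)
            RangeMapper onWorker = shipToWorker(part);
            System.out.println(onWorker.connection);           // null: the transient field was not sent
            onWorker.init();
            System.out.println(onWorker.connection);
        }
    }
}
```

This is why resources such as connections are better opened in a @PostConstruct method than in the constructor: the constructor runs on the manager, and a non-serializable field it creates would either make serialization fail or, if transient, be lost on the way to the worker.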

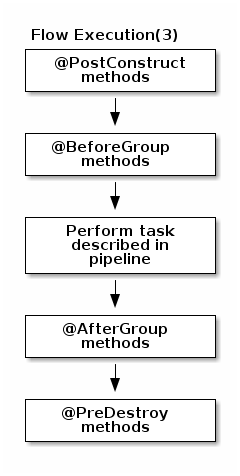

Serialized instances are received and deserialized, and methods annotated with @PostConstruct are called. After that, pipeline execution starts. The @BeforeGroup annotated method of the processor is called before the first element of a chunk is processed.

After processing the number of records estimated as the chunk size, the @AfterGroup annotated method of the processor is called. The chunk size depends on the environment that processes the pipeline. Once the pipeline is processed, methods annotated with @PreDestroy are called.

All the methods managed by the framework must be public. Private methods are ignored.

The framework is designed to be as declarative as possible while staying extensible, by not relying on fixed interfaces or method signatures. This makes it possible to incrementally add new features to the underlying implementations.
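The call order described above, and the rule that only public methods are managed, can be illustrated with a small plain-Java simulation. It is a sketch under stated assumptions: the annotations Init, BeforeChunk, OnElement, AfterChunk and Release are hypothetical stand-ins for @PostConstruct, @BeforeGroup, @ElementListener, @AfterGroup and @PreDestroy, and a fixed chunk size replaces the environment-dependent one. The runner uses Class.getMethods(), which only returns public methods, so a private hook is silently skipped.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-ins for the framework annotations (not Talend APIs).
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Init {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface BeforeChunk {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface OnElement {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface AfterChunk {}
@Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD) @interface Release {}

class SampleProcessor {
    final List<String> events = new ArrayList<>();

    @Init public void init() { events.add("init"); }
    @BeforeChunk public void beforeChunk() { events.add("before"); }
    @OnElement public void onElement(String record) { events.add("element:" + record); }
    @AfterChunk public void afterChunk() { events.add("after"); }
    @Release public void release() { events.add("release"); }

    // Private: never called by the runner, like private methods in the framework.
    @Init private void hidden() { events.add("hidden"); }
}

class LifecycleRunner {
    // Invokes every public method of target that carries the given annotation.
    static void call(Object target, Class<? extends Annotation> marker, Object... args) throws Exception {
        for (Method m : target.getClass().getMethods()) {   // public methods only
            if (m.isAnnotationPresent(marker)) {
                m.invoke(target, args);
            }
        }
    }

    // Init once, then BeforeChunk / OnElement... / AfterChunk per chunk, then Release once.
    static void run(Object processor, List<String> records, int chunkSize) throws Exception {
        call(processor, Init.class);
        for (int i = 0; i < records.size(); i += chunkSize) {
            call(processor, BeforeChunk.class);
            for (String record : records.subList(i, Math.min(i + chunkSize, records.size()))) {
                call(processor, OnElement.class, record);
            }
            call(processor, AfterChunk.class);
        }
        call(processor, Release.class);
    }

    public static void main(String[] args) throws Exception {
        SampleProcessor p = new SampleProcessor();
        run(p, Arrays.asList("a", "b", "c"), 2);
        System.out.println(p.events);
        // [init, before, element:a, element:b, after, before, element:c, after, release]
    }
}
```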

Talend Component Kit Overview

Talend Component Kit is a toolkit based on Java and designed to simplify the development of components at two levels:

- Runtime: Runtime is about injecting the specific component code into a job or pipeline. The framework helps unify as much as possible the code required to run in Data Integration (DI) and Beam environments.
- Graphical interface: The framework helps unify the code required to render the component in a browser (web) or in the Eclipse-based Studio (SWT).

Framework tools

The Talend Component Kit framework is made of several tools designed to help you during the component development process. It allows to develop components that fit in both Java web UIs.

-

Starter: Generate the skeleton of your development project using a user-friendly interface. The Talend Component Kit Starter is available as a web tool or as a plugin for the IntelliJ IDE.

-

Component API: Check all classes available to implement components.

-

Build tools: The framework comes with Maven and Gradle wrappers, which allow to always use the version of Maven or Gradle that is right for your component development environment and version.

-

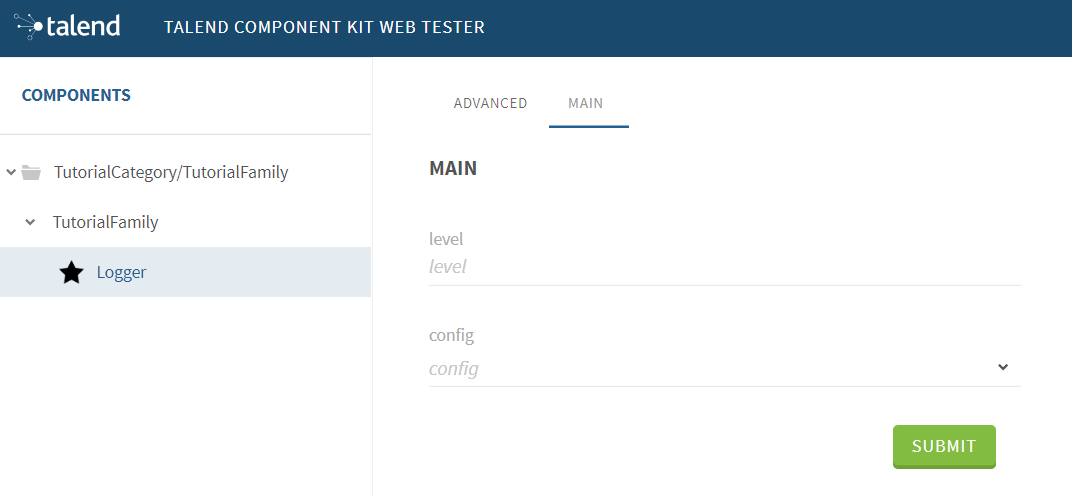

Testing tools: Test components before integrating them into Talend Studio or Cloud applications. Testing tools include the Talend Component Kit Web Tester, which allows to check the web UI of your components on your local machine.

You can find more details about the framework design in this document.

Creating your first component

This tutorial walks you through all the required steps to get started with Talend Component Kit, from the creation of a simple component to its integration into Talend Open Studio.

The component created in this tutorial is a simple output component that receives data from the previous component and displays it in the logs, along with extra information entered by the user.

Once the prerequisites are met, this tutorial should take you about 20 minutes.

Prerequisites

This tutorial helps you create your very first component. Before you start, get your development environment ready:

- Download and install a Java JDK 1.8 or greater.
- Download and install Talend Open Studio, for example from SourceForge.
- Download and install IntelliJ.
- Download the Talend Component Kit plugin for IntelliJ. The detailed installation steps for the plugin are available in this document.

Generating a simple component project

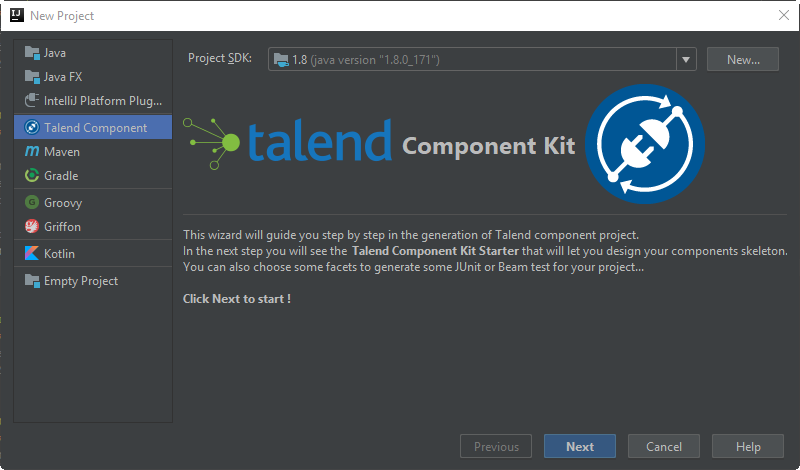

The first step in this tutorial is to generate a project containing a simple output component using the Starter included in the Talend Component Kit plugin for IntelliJ.

- Start IntelliJ and create a new project. In the available options, you should see Talend Component.
- Make sure that a Project SDK is selected. Then, select Talend Component and click Next.
  The Talend Component Kit Starter opens.

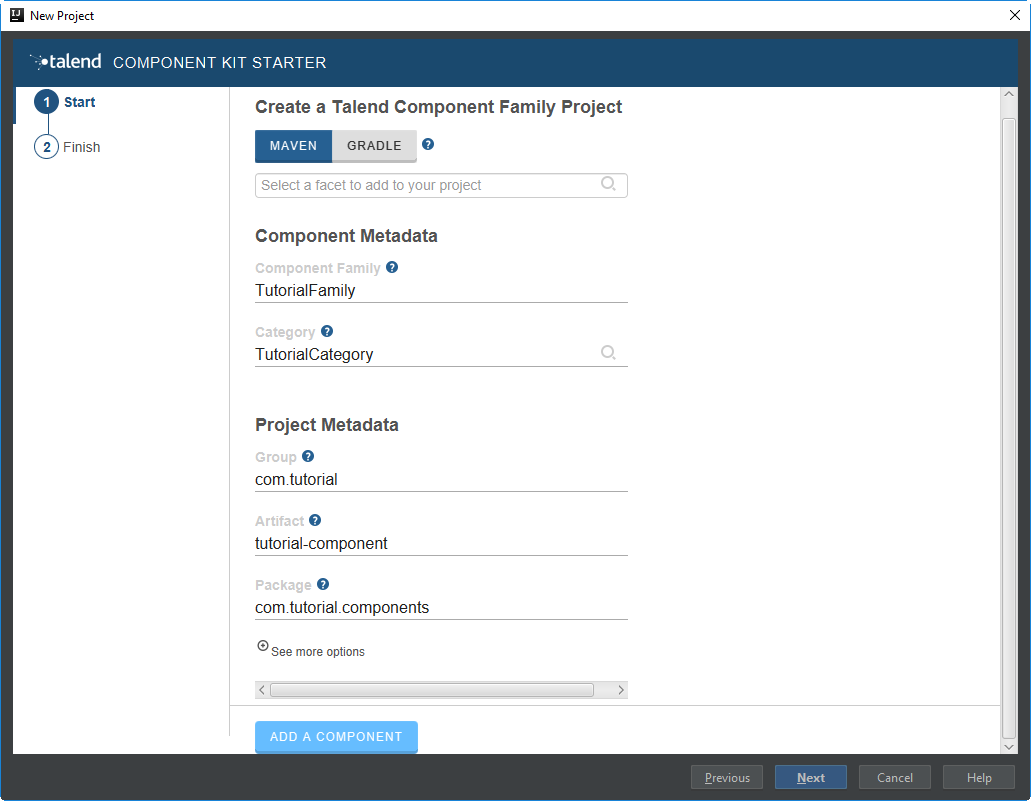

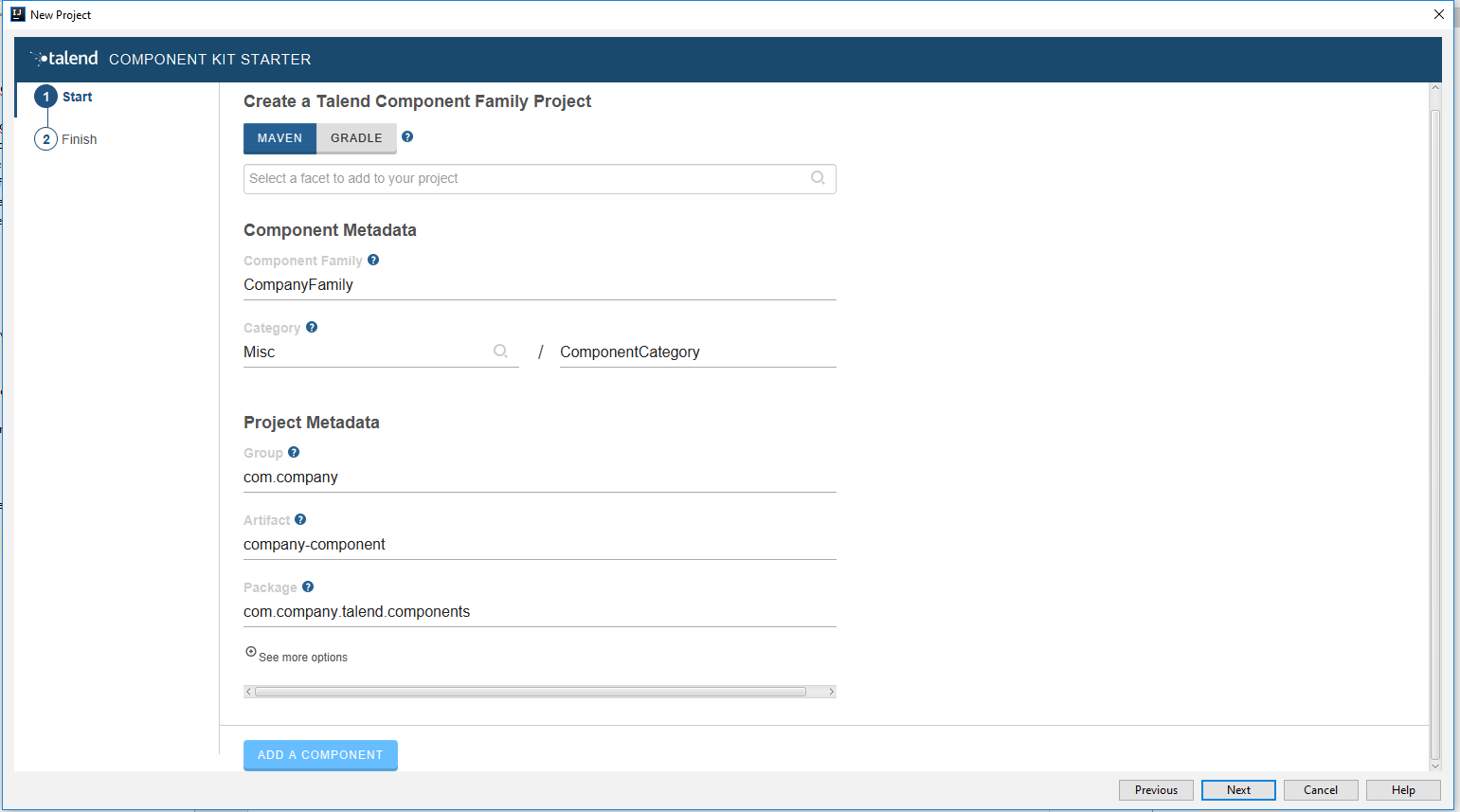

- Enter the project details. The goal here is to define the component and project metadata. Change the default values as follows:
  - The Component Family and the Category will be used later in Talend Open Studio to find the new component.
  - The project metadata is mostly used to identify the project structure. A common practice is to replace 'company' in the default value with a value of your own, like your domain name.

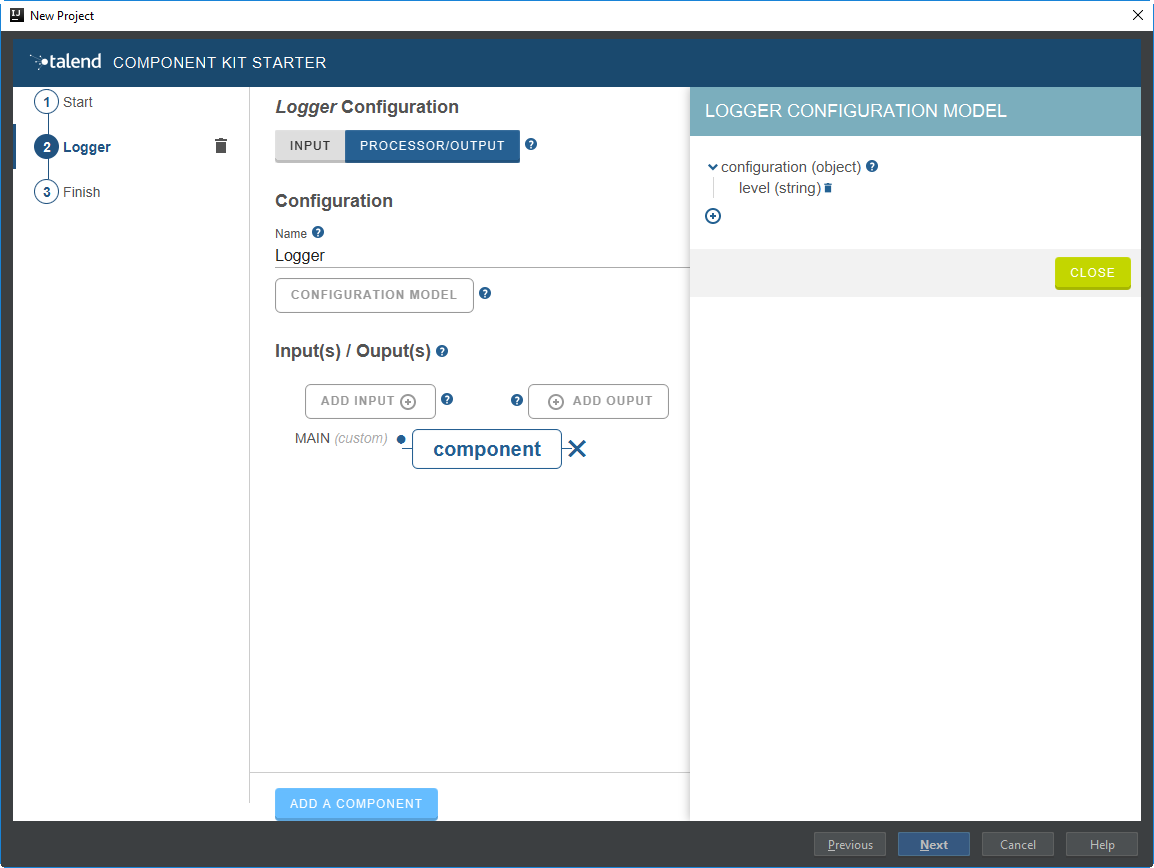

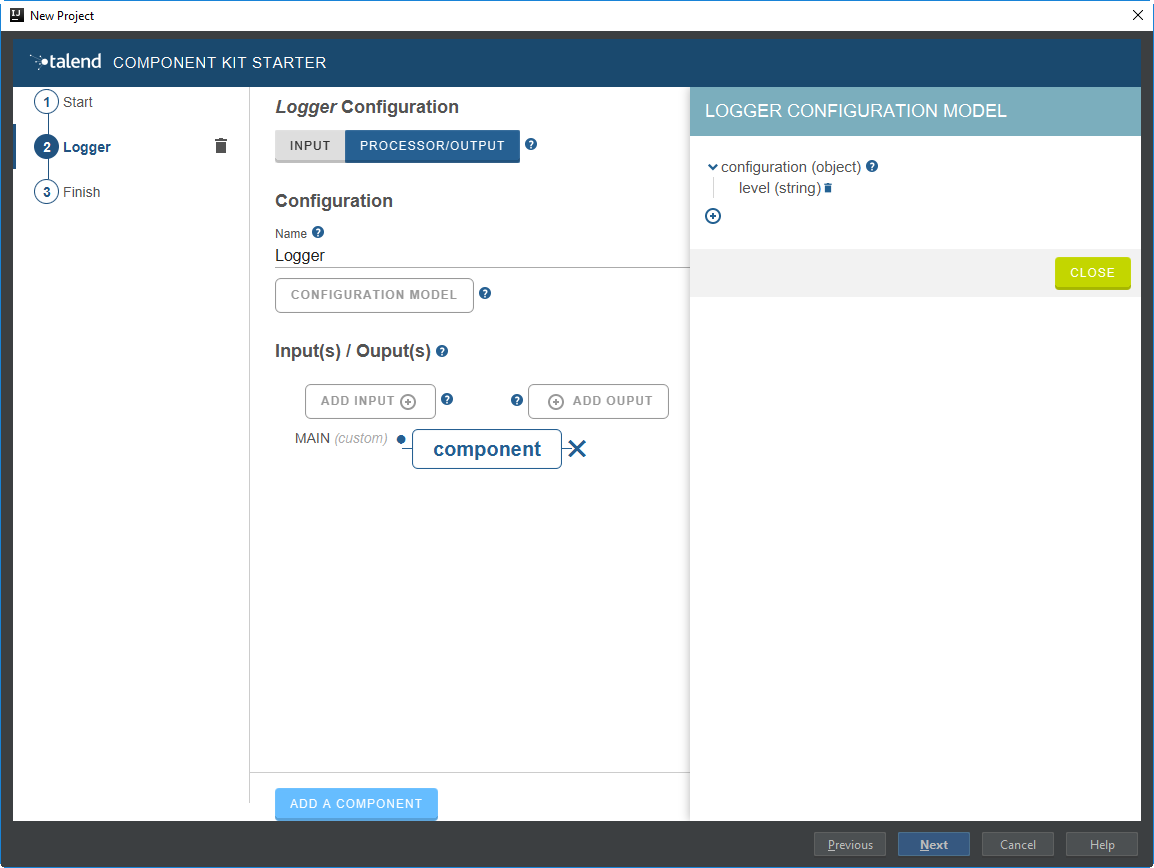

- Once the metadata is filled in, select ADD A COMPONENT. A new screen is displayed in the Talend Component Kit Starter that lets you define the generic configuration of the component.
  - Select PROCESSOR/OUTPUT and enter a valid Java name for the component. For example, Logger.
  - Select CONFIGURATION MODEL and add a string field named level. This input field will be used in the component configuration to enter additional information to display in the logs.
  - In the Input(s) / Output(s) section, click the default MAIN input branch to access its details, and toggle the Generic option to specify that the component can receive any type of data. Leave the Name of the branch with its default MAIN value.

  By default, when selecting PROCESSOR/OUTPUT, there is one input branch and no output branch for the component, which is fine in the case of this tutorial. A processor without any output branch is considered an output component.

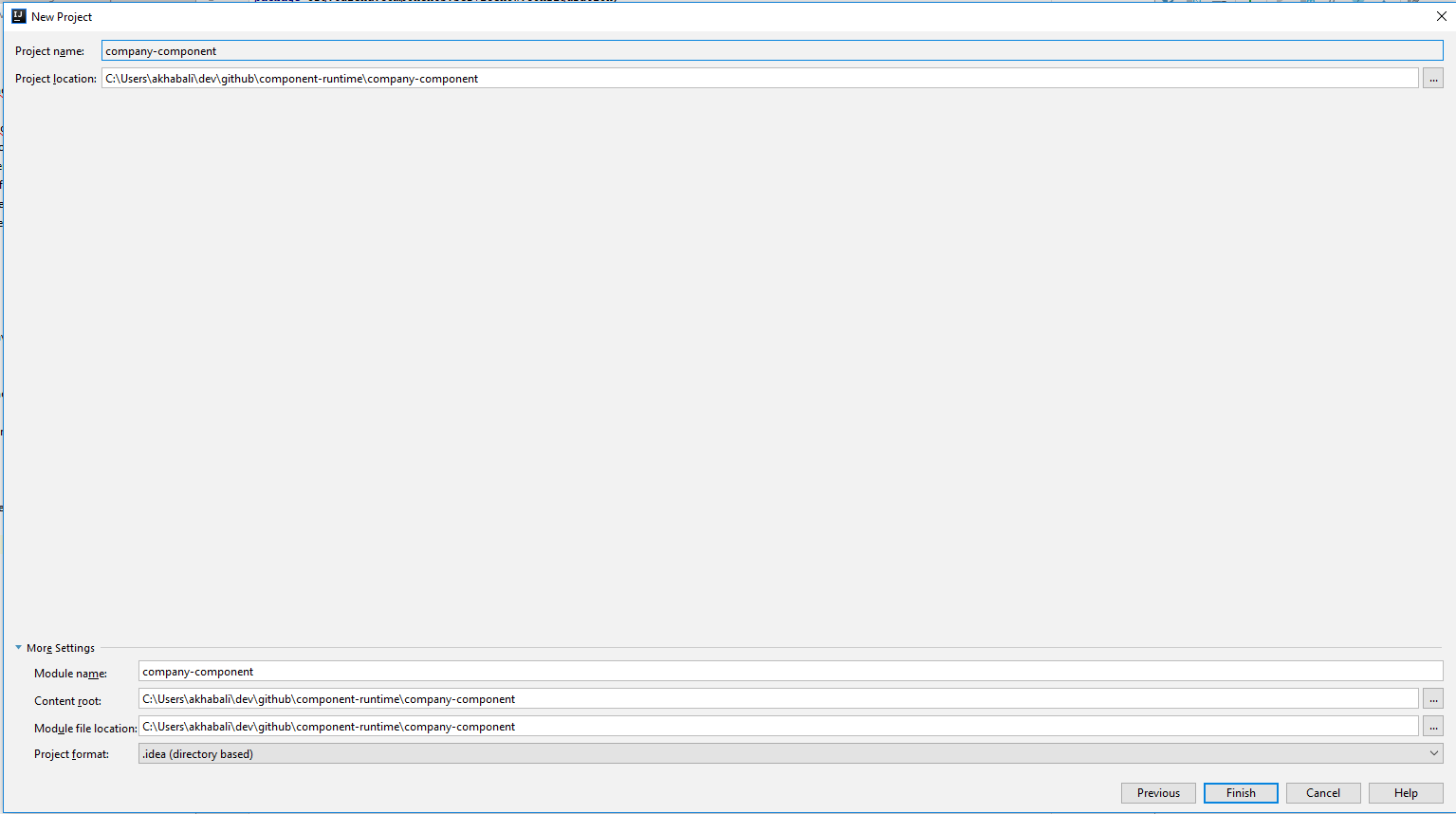

Click Next and check the name and location of your project, then click Finish to generate the project in the IDE.

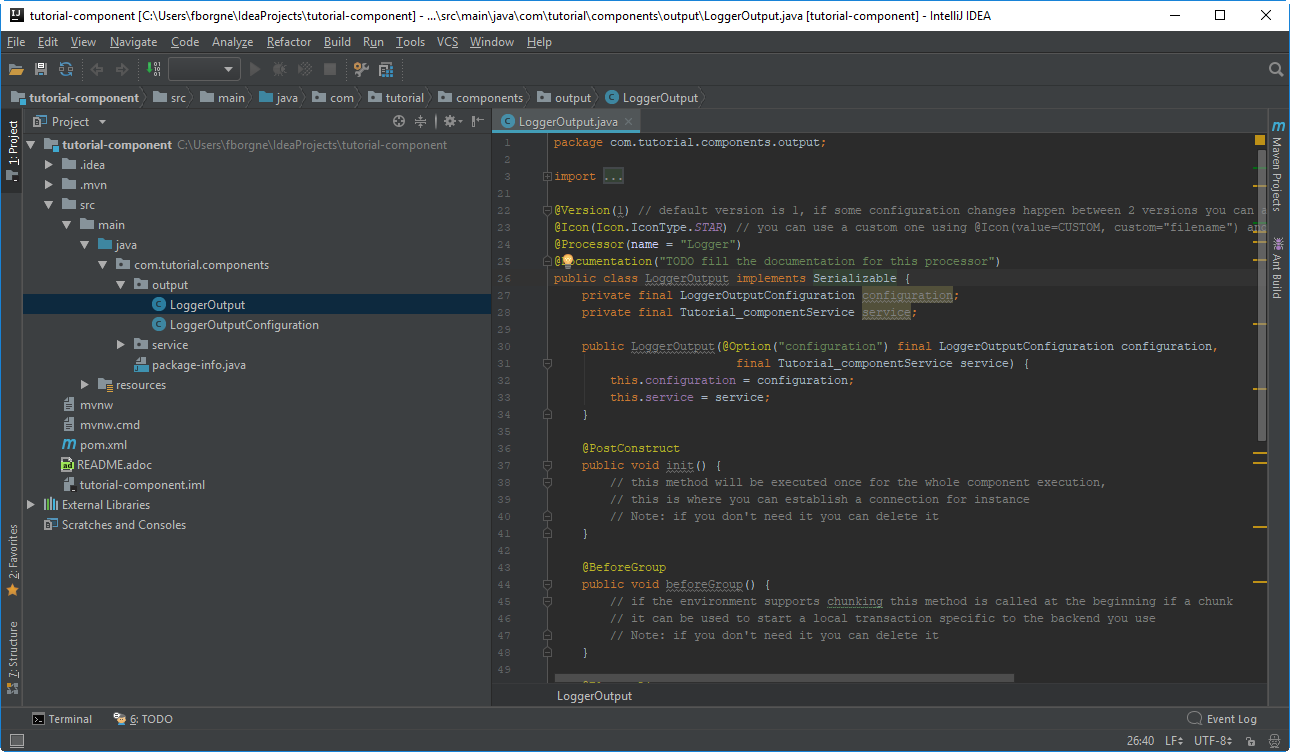

At this point, your component is technically already ready to be compiled and deployed to Talend Open Studio. But first, have a look at the generated project:

-

Two classes based on the name and type of component defined in the Talend Component Kit Starter have been generated:

-

LoggerOutput is where the component logic is defined

-

LoggerOutputConfiguration is where the component layout and configurable fields are defined, including the level string field that was defined earlier in the configuration model of the component.

-

-

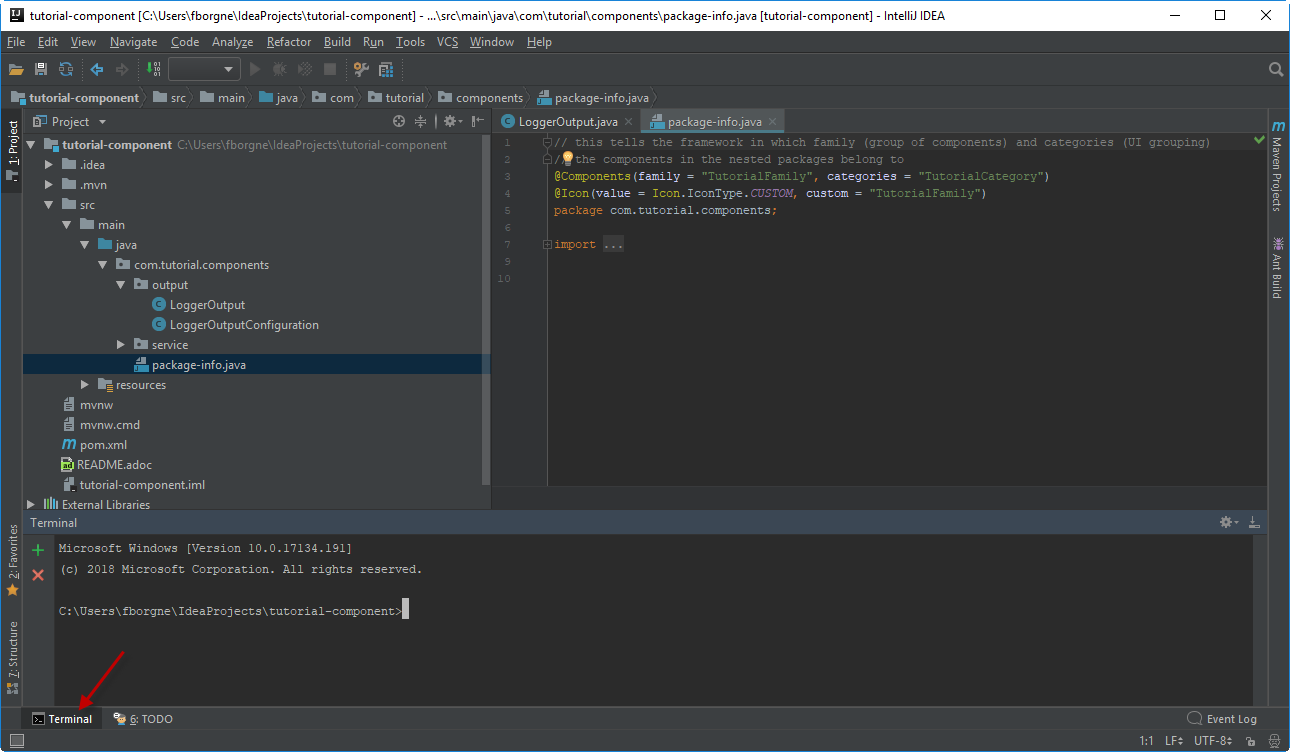

The package-info.java file contains the component metadata defined in the Talend Component Kit Starter, like the family and category.

-

You can notice as well that the elements in the tree structure are named after the project metadata defined in the Talend Component Kit Starter.

These files are the starting point if you later need to edit the configuration, logic, and metadata of your component.

There is more that you can do and configure with the Talend Component Kit Starter. This tutorial covers only the basics. You can find more information in this document.

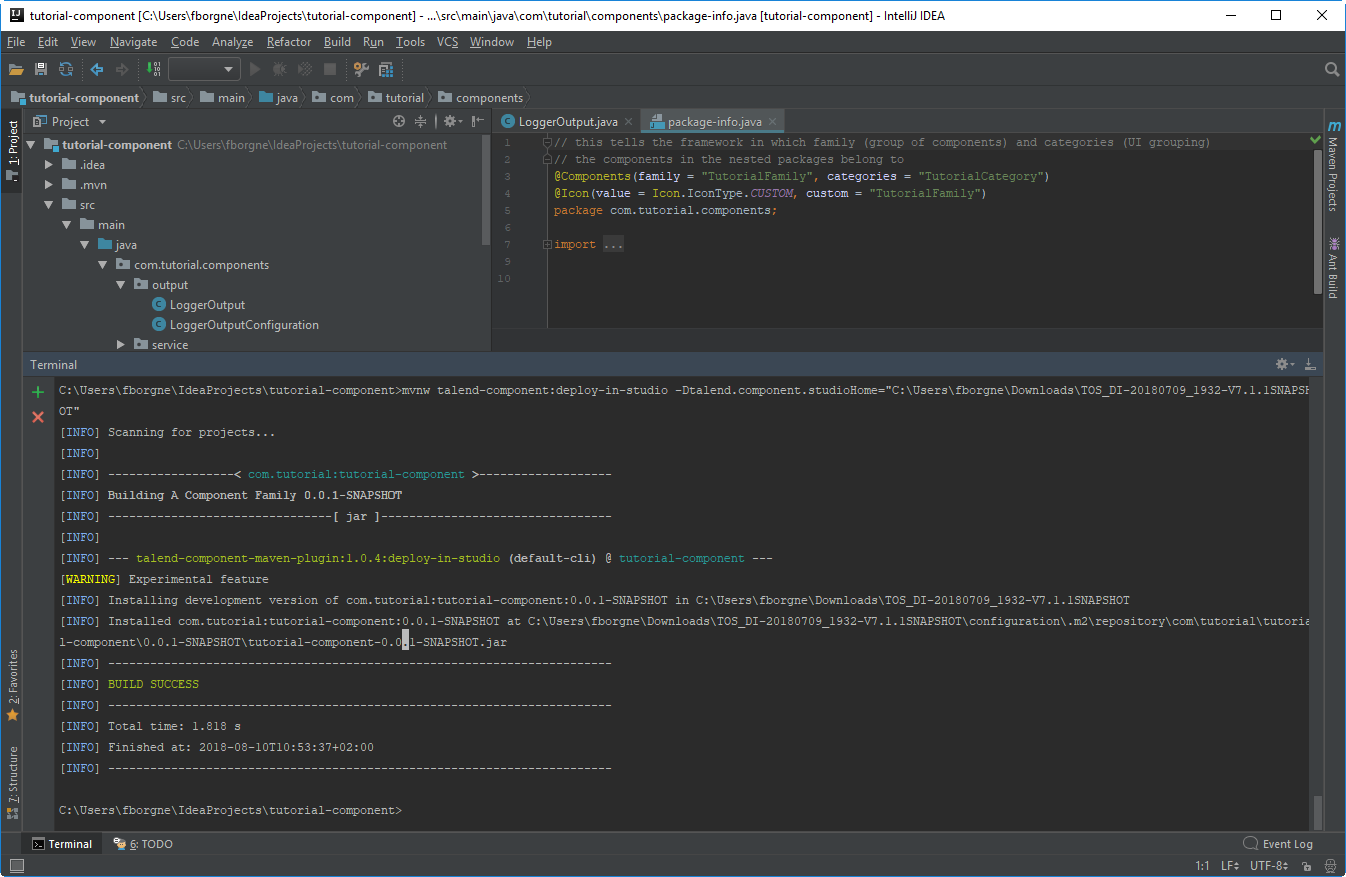

Compiling and deploying the component to Talend Open Studio

Without any modification in the component code, you can compile the project and deploy the component to a local instance of Talend Open Studio.

This way, it will be easy to check that what is visible in the Studio is what is intended.

Before starting to run any command, make sure Talend Open Studio is not running.

-

From your component project in IntelliJ, open a terminal.

There, you can see that the terminal opens directly at the root of the project. All commands shown in this tutorial are performed from this folder.

-

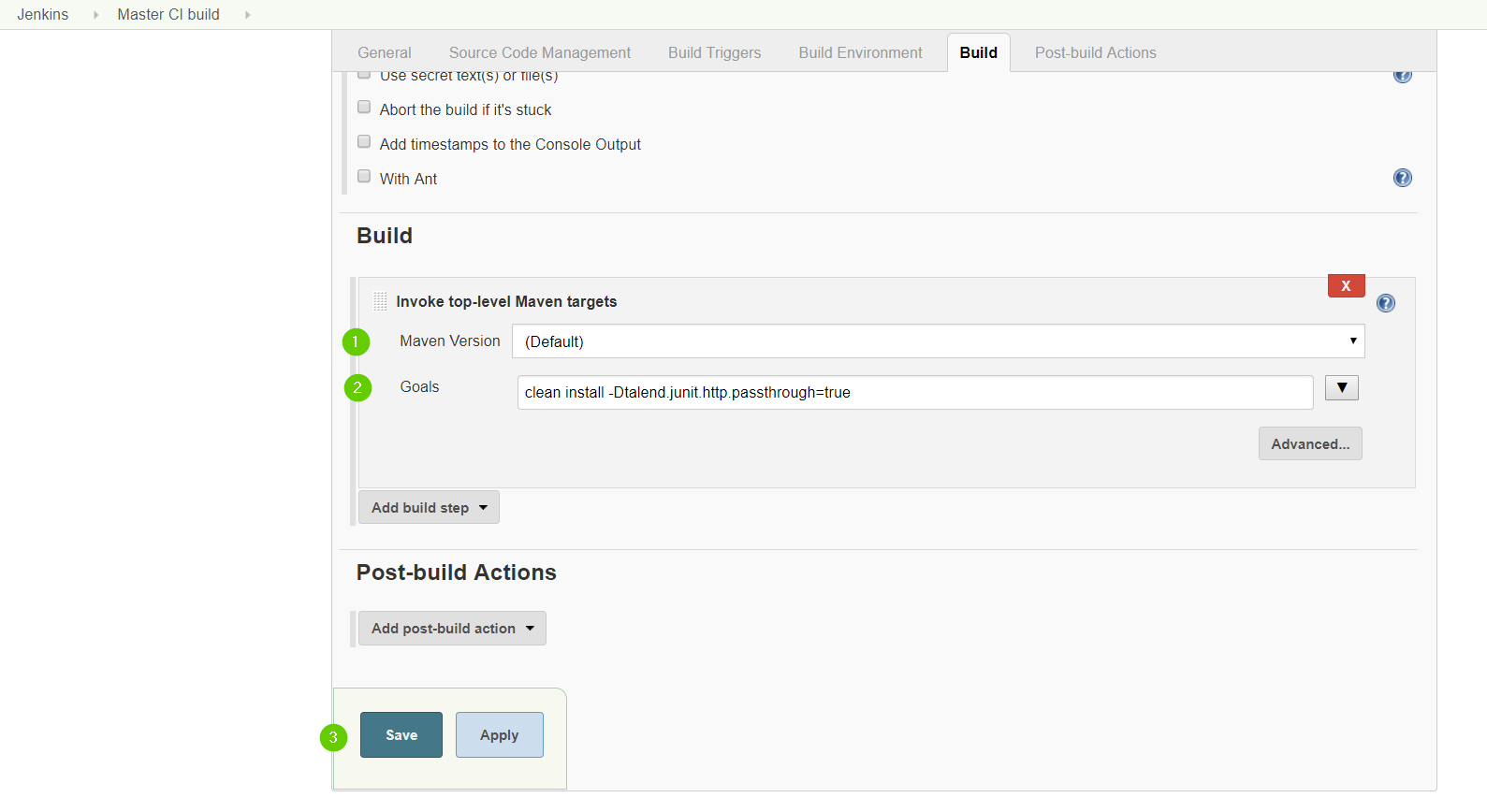

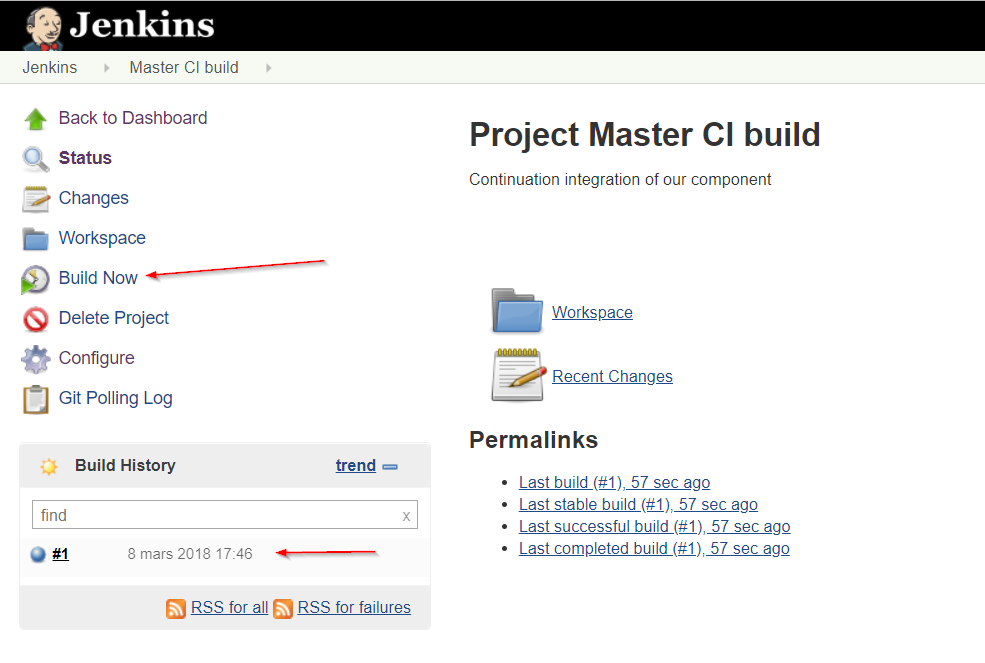

Compile the project by running the following command:

mvnw clean install.

Themvnwcommand refers to the Maven wrapper that is shipped with Talend Component Kit. It allows to use the right version of Maven for your project without having to install it manually beforehand. An equivalent wrapper is available for Gradle. -

Once the command is executed and you see BUILD SUCCESS in the terminal, deploy the component to your local instance of Talend Open Studio using the following command:

mvnw talend-component:deploy-in-studio -Dtalend.component.studioHome="<path to Talend Open Studio home>"Replace the path by your own value. If the path contains spaces (for example, Program Files), enclose it with double quotes. -

Make sure the build is successful.

-

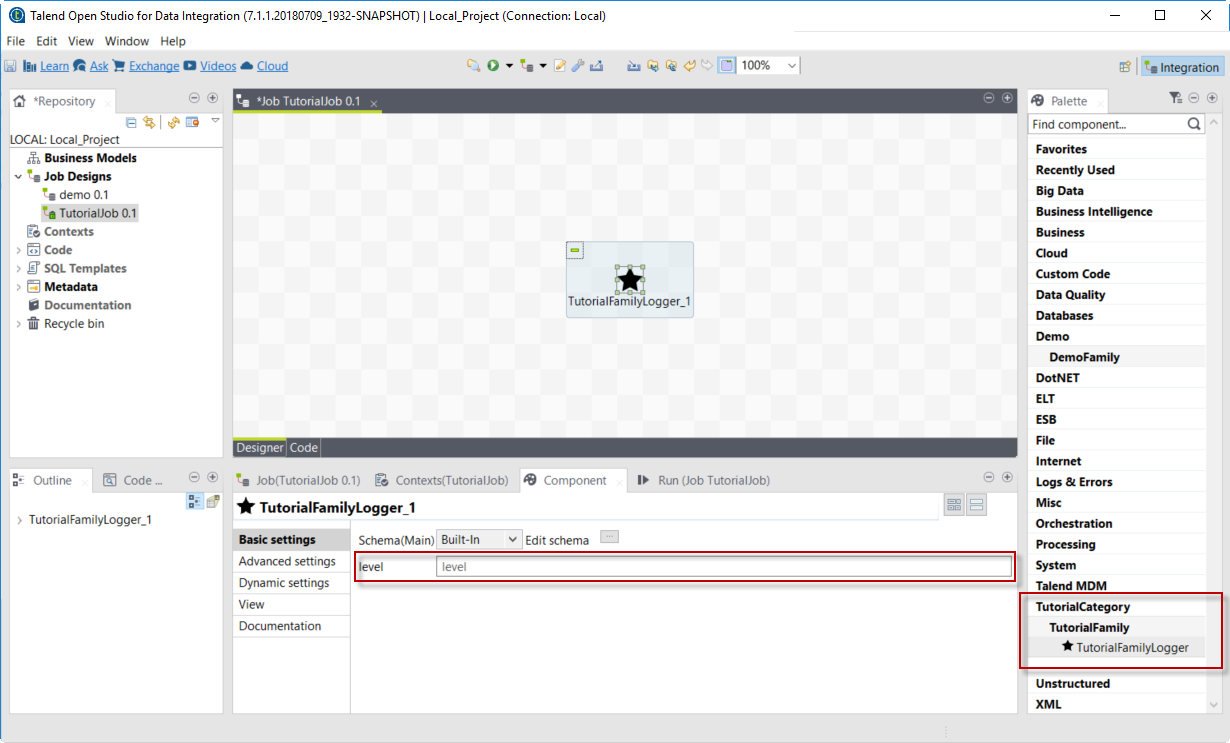

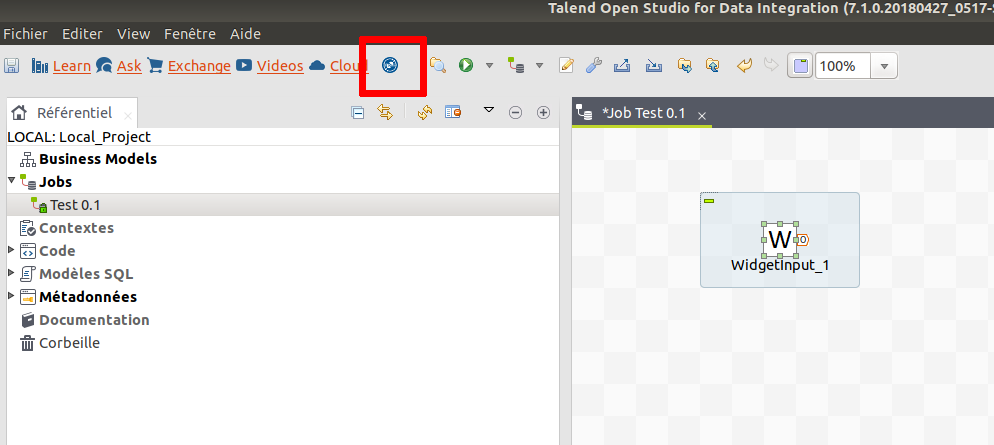

Open Talend Open Studio and create a new Job:

-

The new component is present inside the new family and category that were specified in the Talend Component Kit Starter. You can add it to your job and open its settings.

-

Notice that the level field that was specified in the configuration model of the component in the Talend Component Kit Starter is present.

-

At this point, your new component is available in Talend Open Studio, and its configurable part is already set. But the component logic is still to be defined.

As a reminder, the initial goal of this component is to output the information it receives as input to the logs of the job.

Go to the next section to learn how to define a simple logic.

Editing the component

You can now edit the component to implement a simple logic that reads the data contained in the input branch of the component and displays it in the execution logs of the job. The value of the level field of the component also needs to be displayed, converted to uppercase.

- Save the job created earlier and close Talend Open Studio.
- Back in IntelliJ, open the LoggerOutput class. This is the class where the component logic is defined.
- Look for the @ElementListener method. It is already present and references the default input branch that was defined in the Talend Component Kit Starter, but it is not complete yet.
- To log the input data to the console, add the following lines:

//Log to the console
System.out.println("[" + configuration.getLevel().toUpperCase() + "] " + defaultInput);

  The @ElementListener method now looks as follows:

@ElementListener
public void onNext(@Input final JsonObject defaultInput) {
    // this is the method allowing you to handle the input(s) and emit the output(s)
    // after some custom logic you put here, to send a value to next element you can use an
    // output parameter and call emit(value).

    // Log to the console; configuration holds the LoggerOutputConfiguration values
    System.out.println("[" + configuration.getLevel().toUpperCase() + "] " + defaultInput);
}

- Open the terminal again to compile the project and deploy the component again. To do that, run the two following commands successively:
  - mvnw clean install
  - mvnw talend-component:deploy-in-studio -Dtalend.component.studioHome="<path to Talend Open Studio home>"

The update of the component logic should now be deployed to the Studio. After restarting the Studio, you will be ready to build a job and use your component for the first time.
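The statement added to onNext builds one log line per record. Below is a plain-Java sketch of that formatting outside of Talend, assuming the record is already rendered as a string. Two hardening choices are assumptions, not part of the generated code: uppercasing with Locale.ROOT, so the result does not depend on the machine locale (for example, the Turkish dotless i), and falling back to a hypothetical default level when the level field is left empty, instead of throwing a NullPointerException.

```java
import java.util.Locale;

class LogLineFormatter {
    // Hypothetical default used when the level field is empty.
    static final String DEFAULT_LEVEL = "INFO";

    // Builds "[LEVEL] record", like the System.out.println call in onNext.
    static String format(String level, String record) {
        String safeLevel = (level == null || level.trim().isEmpty())
                ? DEFAULT_LEVEL
                : level.trim().toUpperCase(Locale.ROOT);
        return "[" + safeLevel + "] " + record;
    }

    public static void main(String[] args) {
        System.out.println(format("info", "{\"firstName\":\"Ada\"}"));  // [INFO] {"firstName":"Ada"}
        System.out.println(format("warn", "{}"));                       // [WARN] {}
    }
}
```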

To learn the different possibilities and methods available to develop more complex logic, refer to this document.

If you want to avoid having to close and re-open Talend Open Studio every time you need to make an edit, you can enable the developer mode, as explained in this document.

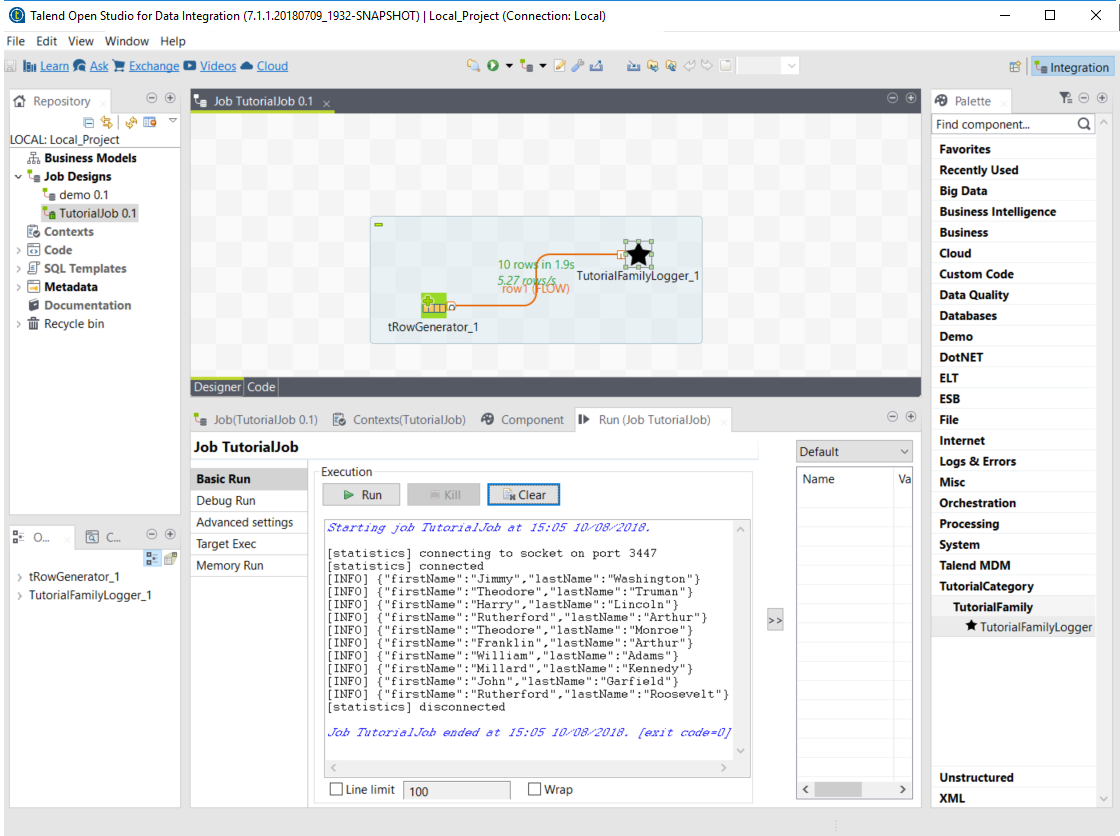

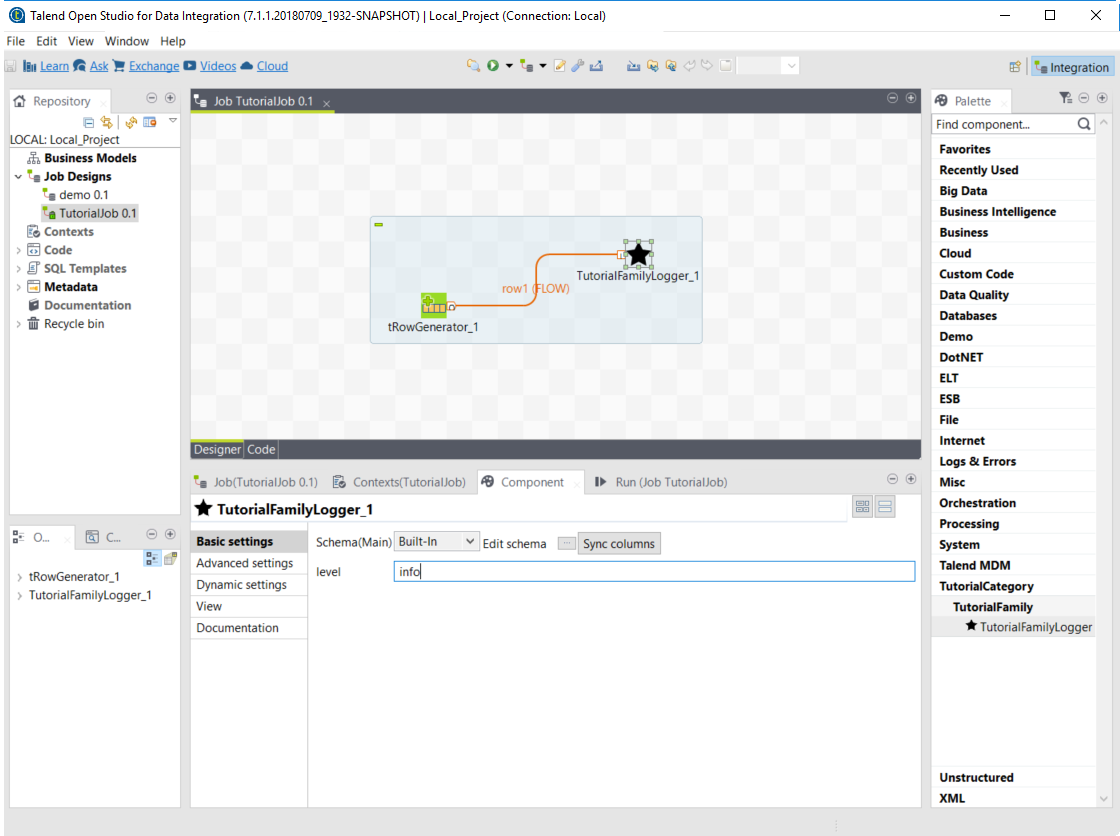

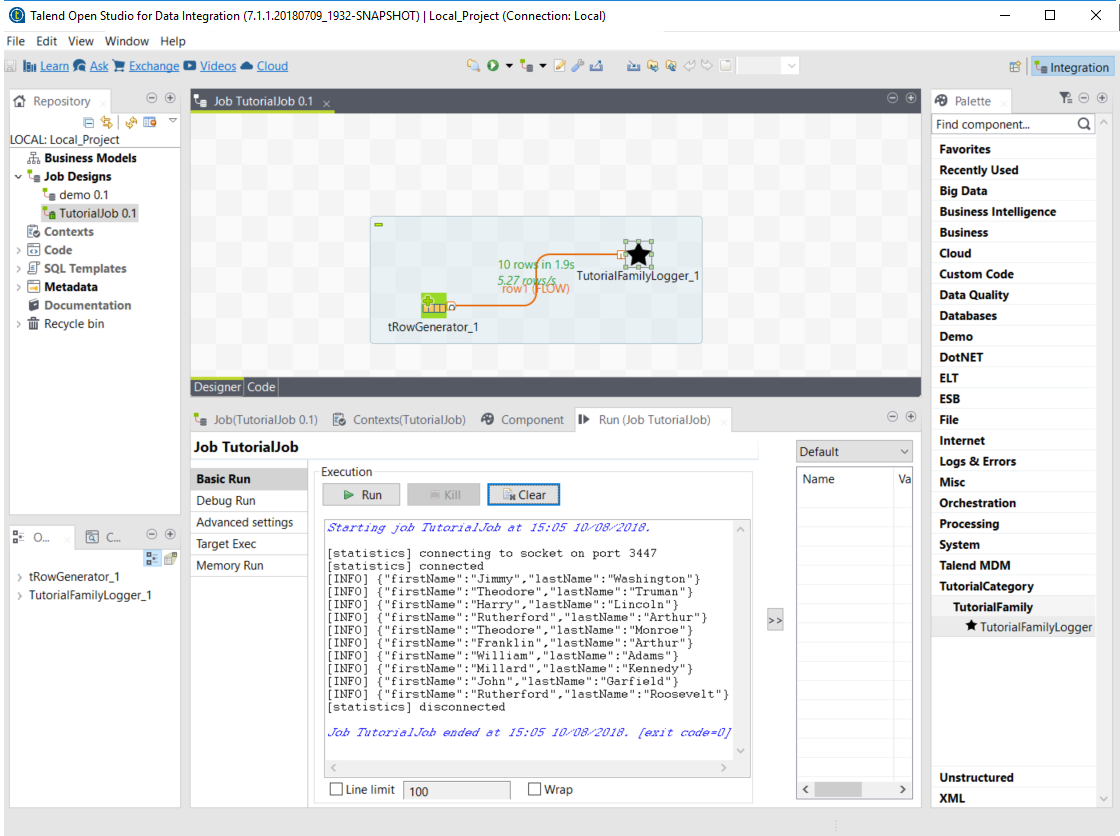

Building a job with the component

As the component is now ready to be used, it is time to create a job and check that it behaves as intended.

-

Open Talend Open Studio again and go to the job created earlier. The new component is still there.

-

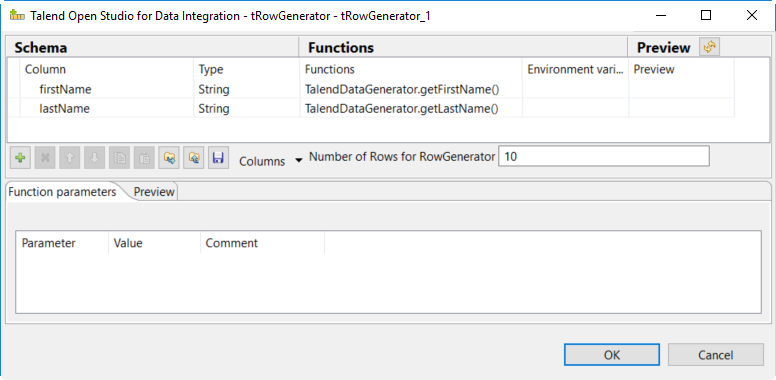

Add a tRowGenerator component and connect it to the logger.

-

Double-click the tRowGenerator to specify the data to generate:

-

Add a first column named

firstNameand select the *TalendDataGenerator.getFirstName() function. -

Add a second column named 'lastName' and select the *TalendDataGenerator.getLastName() function.

-

Set the Number of Rows for RowGenerator to

10.

-

-

Validate the tRowGenerator configuration.

-

Open the TutorialFamilyLogger component and set the level field to

info.

-

Go to the Run tab of the job and run the job.

The job is executed. You can observe in the console that each of the 10 generated rows is logged, and that theinfovalue entered in the logger is also displayed with each record, in uppercase.

Setting up your environment

Before being able to develop components using Talend Component Kit, you need the right system configuration and tools.

Although Talend Component Kit comes with some embedded tools, such as Maven and Gradle wrappers, you still need to prepare your system. A Talend Component Kit plugin for IntelliJ is also available and lets you design and generate your component project right from IntelliJ.

System prerequisites

In order to use Talend Component Kit, you need the following tools installed on your machine:

- Java JDK 1.8.x. You can download it from the Oracle website.
- Talend Open Studio 7.0 or greater to integrate your components.
- A Java Integrated Development Environment such as Eclipse or IntelliJ. IntelliJ is recommended, as a Talend Component Kit plugin is available for it.
- Optional: If you use IntelliJ, you can install the Talend Component Kit plugin for IntelliJ.
- Optional: A build tool:
  - Apache Maven 3.5.4 is recommended to develop a component or the project itself. You can download it from the Apache Maven website.
  - You can also use Gradle, but at the moment certain features are not supported, such as validations.

  It is optional to install a build tool independently, since Maven and Gradle wrappers are already available with Talend Component Kit.
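To confirm that the JDK you run meets the 1.8 requirement, you can read the java.specification.version system property, which reports "1.8" on Java 8 and "9", "11", "17" and so on for later releases. The parsing helper below is a small sketch; JdkCheck and its methods are illustrative names.

```java
class JdkCheck {
    // Converts "1.8" to 8 and "11" to 11 (releases before 9 use the "1.x" scheme).
    static int majorVersion(String specVersion) {
        String v = specVersion.startsWith("1.") ? specVersion.substring(2) : specVersion;
        int dot = v.indexOf('.');
        return Integer.parseInt(dot >= 0 ? v.substring(0, dot) : v);
    }

    static boolean meetsMinimum(String specVersion, int minimum) {
        return majorVersion(specVersion) >= minimum;
    }

    public static void main(String[] args) {
        String spec = System.getProperty("java.specification.version");
        System.out.println("Java " + spec + " meets the 1.8 minimum: " + meetsMinimum(spec, 8));
    }
}
```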

Installing the Talend Component Kit IntelliJ plugin

The Talend Component Kit IntelliJ plugin is a plugin for the IntelliJ Java IDE. It adds support for creating Talend Component Kit projects.

Main features:

- Project generation support.
- Internationalization completion for component configuration.

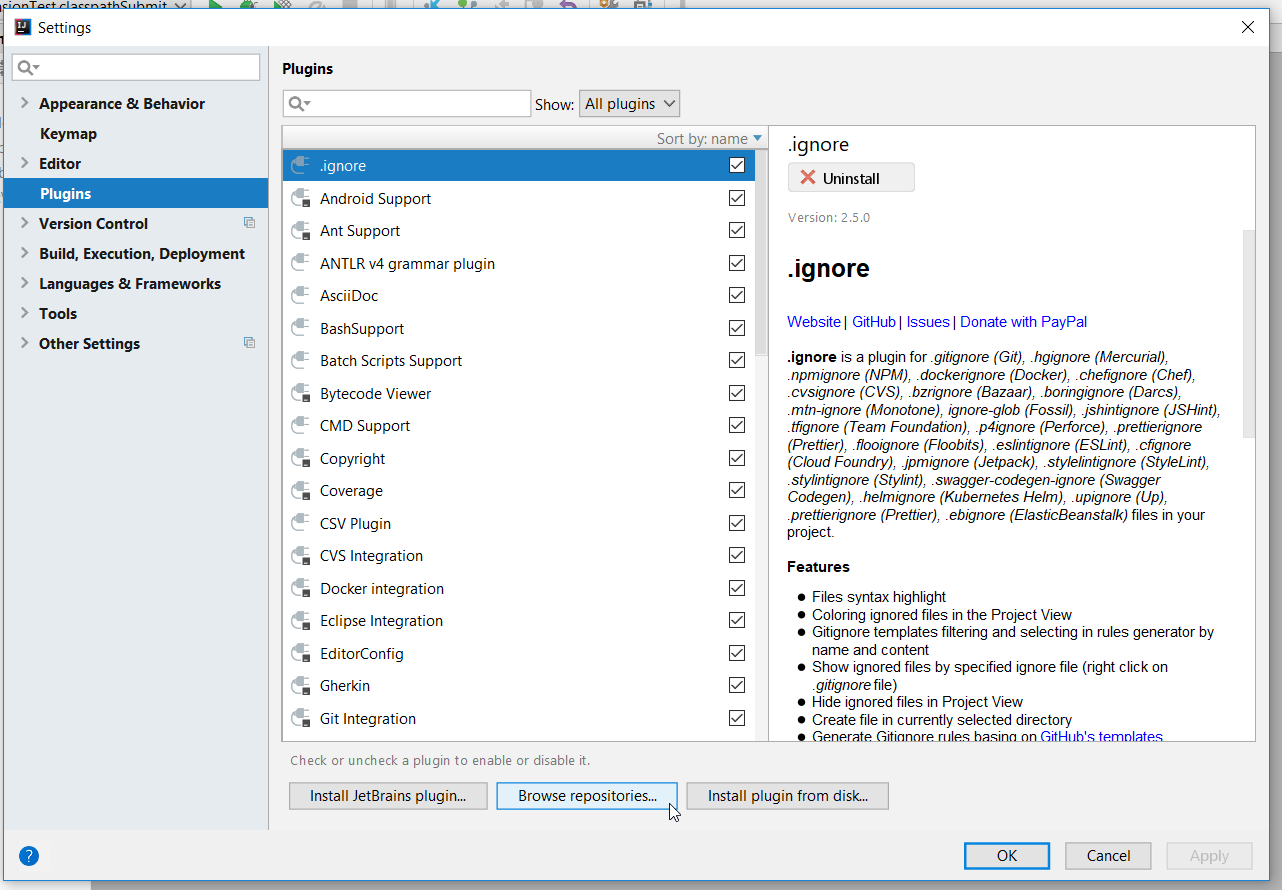

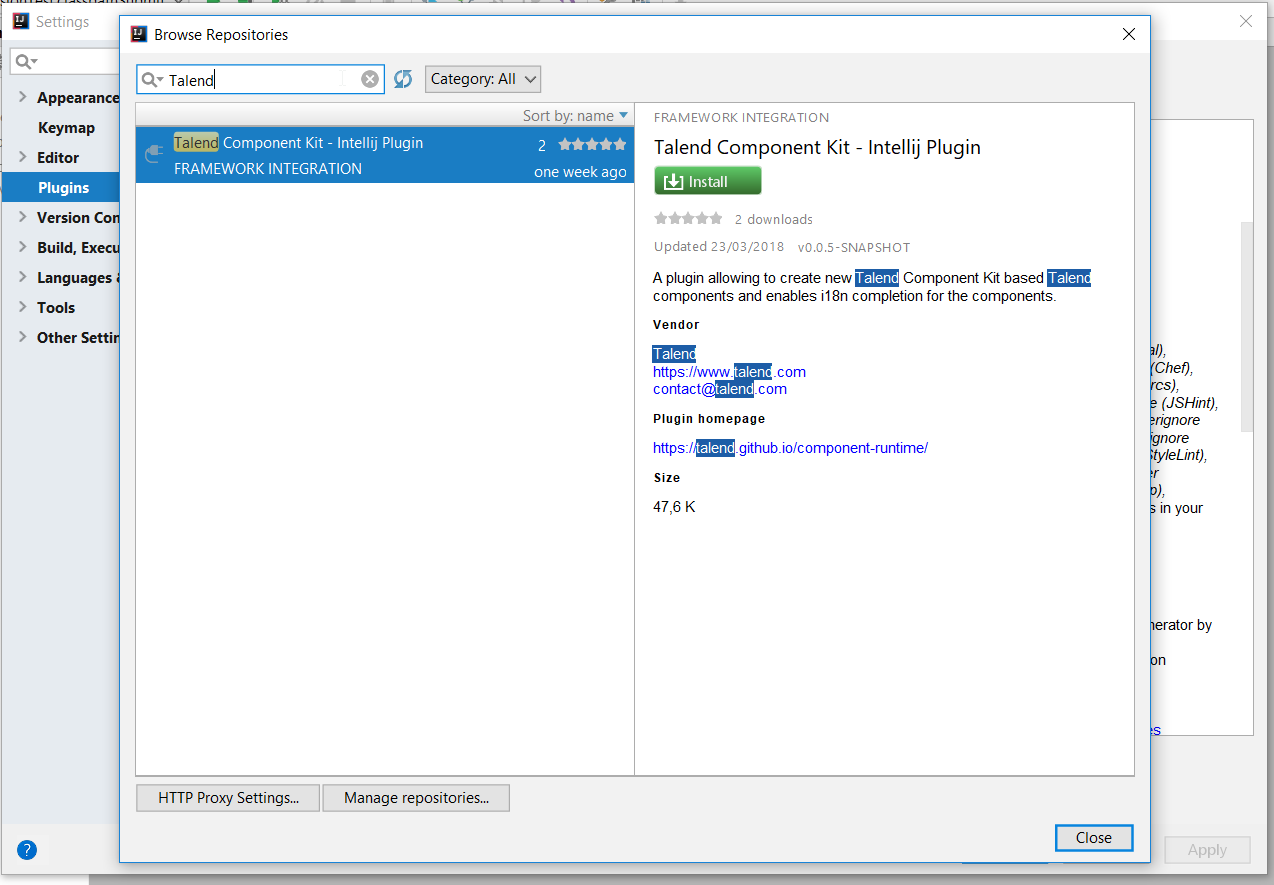

Installing the IntelliJ plugin

In the Intellij IDEA:

-

Go to File > Settings…

-

On the left panel, select Plugins.

-

Select Browse repositories…

-

Enter

Talendin the search field and chooseTalend Component Kit - Intellij Plugin. -

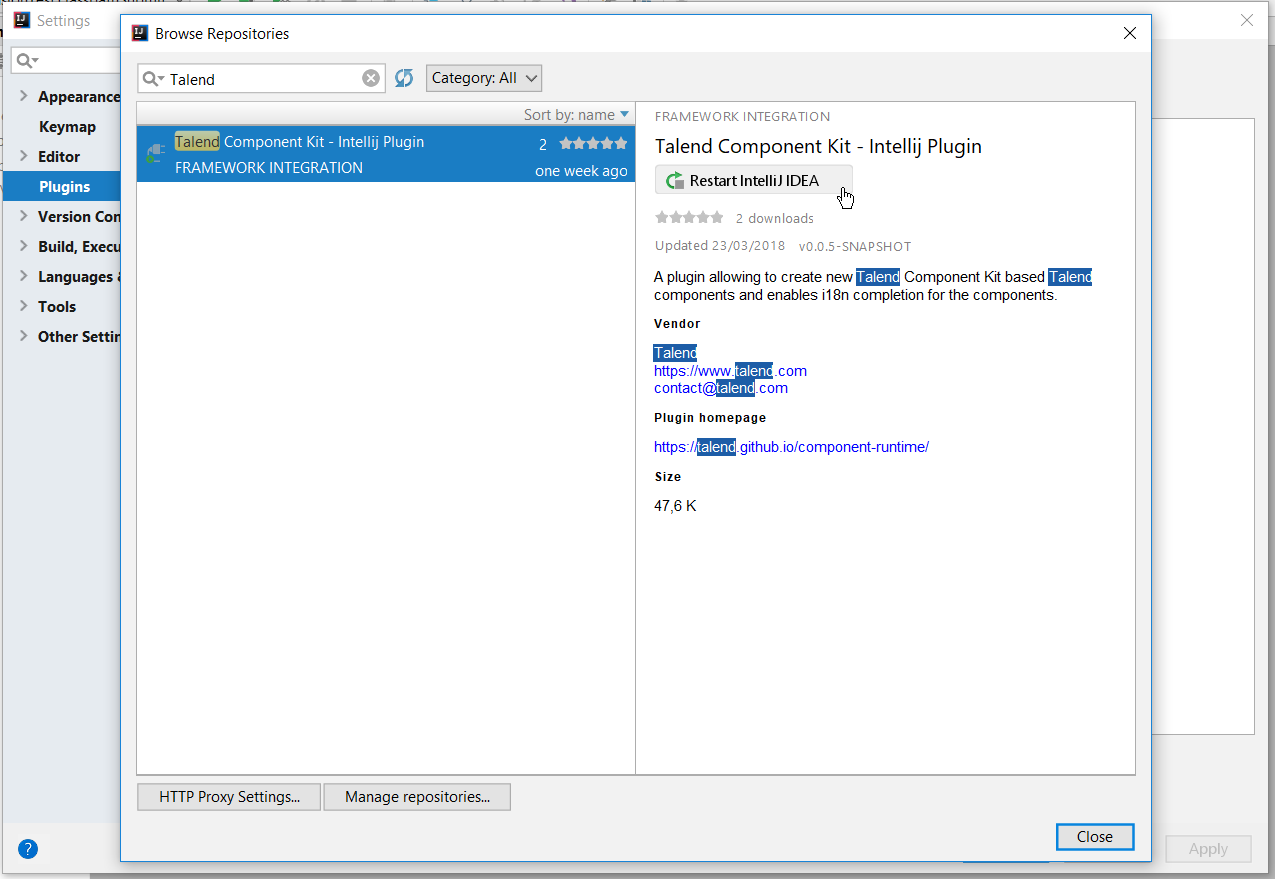

Select Install on the right.

-

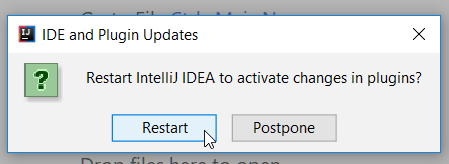

Click the Restart IntelliJ IDEA button.

-

Confirm the IDEA restart to complete the installation.

The plugin is now installed on your IntelliJ IDEA. You can start using it.

About the internationalization completion

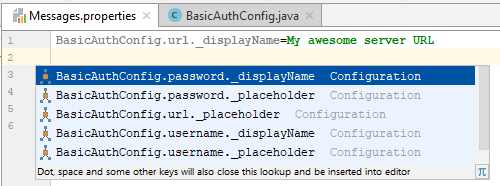

The plugin offers auto-completion for the configuration internationalization. The Talend component configuration lets you set up translatable and user-friendly labels for your configuration using a property file. Auto-completion is possible for the configuration keys and default values in the property file.

For example, you can internationalize a simple configuration class for a basic authentication that you use in your component:

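import java.io.Serializable;

import org.talend.sdk.component.api.configuration.Option;
import org.talend.sdk.component.api.configuration.action.Checkable;
import org.talend.sdk.component.api.configuration.type.DataStore;
import org.talend.sdk.component.api.configuration.ui.layout.GridLayout;
import org.talend.sdk.component.api.configuration.ui.widget.Credential;

@Checkable("basicAuth")
@DataStore("basicAuth")
@GridLayout({
    @GridLayout.Row({ "url" }),
    @GridLayout.Row({ "username", "password" }),
})
public class BasicAuthConfig implements Serializable {
    @Option
    private String url;

    @Option
    private String username;

    @Option
    @Credential
    private String password;
}

This configuration class contains three properties to which you can attach a user-friendly label.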

For example, you can define a label like My server URL for the url option:

- Create a Messages.properties file in the project resources and add the label to that file. The plugin automatically detects your configuration and provides you with key completion in the property file.
- Press Ctrl+Space to see the key suggestions.
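The sketch below shows how such a property file resolves to labels, using only java.util.Properties. It assumes the key pattern <ConfigurationClass>.<option>._displayName for display labels; check the keys the plugin actually suggests in your version. LabelLookup is a hypothetical helper, and falling back to the raw option name when no label is defined is a choice of this sketch.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

class LabelLookup {
    // Assumed key pattern: <ConfigurationClass>.<option>._displayName
    static String labelFor(Properties messages, String configClass, String option) {
        return messages.getProperty(configClass + "." + option + "._displayName", option);
    }

    // Parses Messages.properties content; spaces around '=' are ignored by Properties.
    static Properties load(String content) throws IOException {
        Properties p = new Properties();
        p.load(new StringReader(content));
        return p;
    }

    public static void main(String[] args) throws IOException {
        Properties messages = load("BasicAuthConfig.url._displayName = My server URL\n");
        System.out.println(labelFor(messages, "BasicAuthConfig", "url"));       // My server URL
        System.out.println(labelFor(messages, "BasicAuthConfig", "username"));  // username
    }
}
```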

Generating a project

The first step when developing new components is to create a project that will contain the skeleton of your components and set you on the right track.

The project generation can be achieved using the Talend Component Kit Starter or the Talend Component Kit plugin for IntelliJ.

Through a user-friendly interface, you can define the main lines of your project and of your component(s), including their name, family, type, configuration model, and so on.

Once completed, all the information you filled in is used to generate a project that you will use as a starting point to implement the logic and layout of your components, and to iterate on them.

Once your project is generated, you can start implementing the component logic.

Generating a project using the Component Kit starter

The Component Kit starter lets you design the configuration of your components and generates a ready-to-implement project structure.

This tutorial shows you how to use the Component Kit starter to generate new components for MySQL databases. Before starting, make sure that you have correctly set up your environment. See this section.

Configuring the project

Before being able to create components, you need to define the general settings of the project:

-

Create a folder on your local machine to store the resource files of the component you want to create. For example,

C:/my_components. -

Open the starter in the web browser of your choice.

-

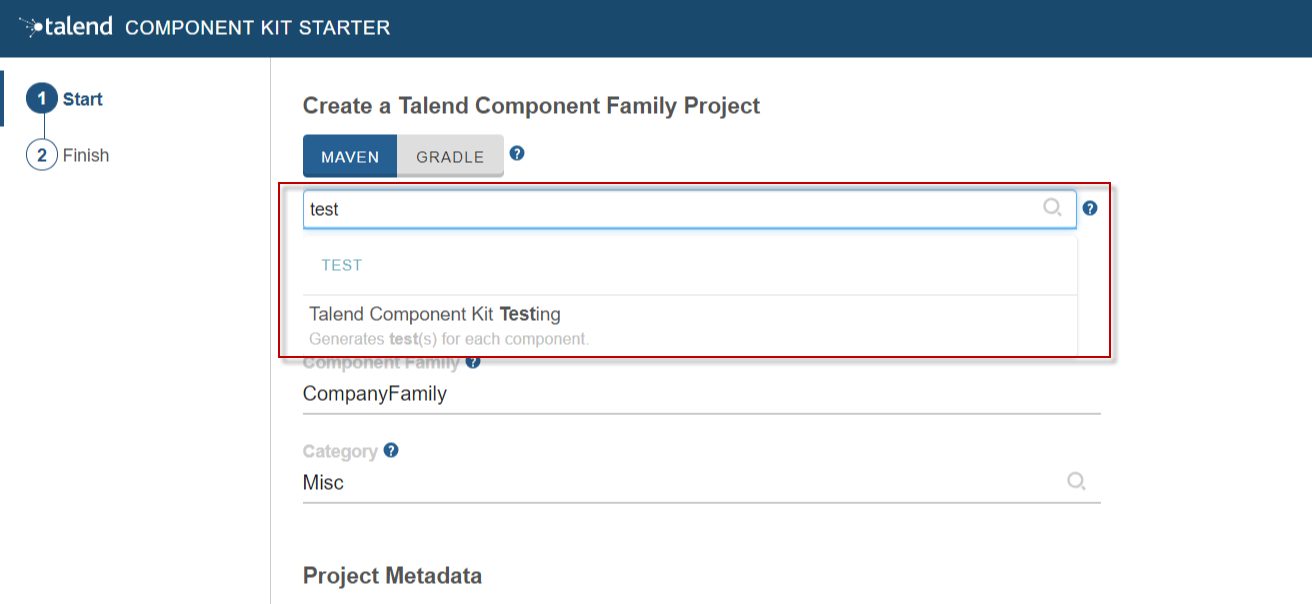

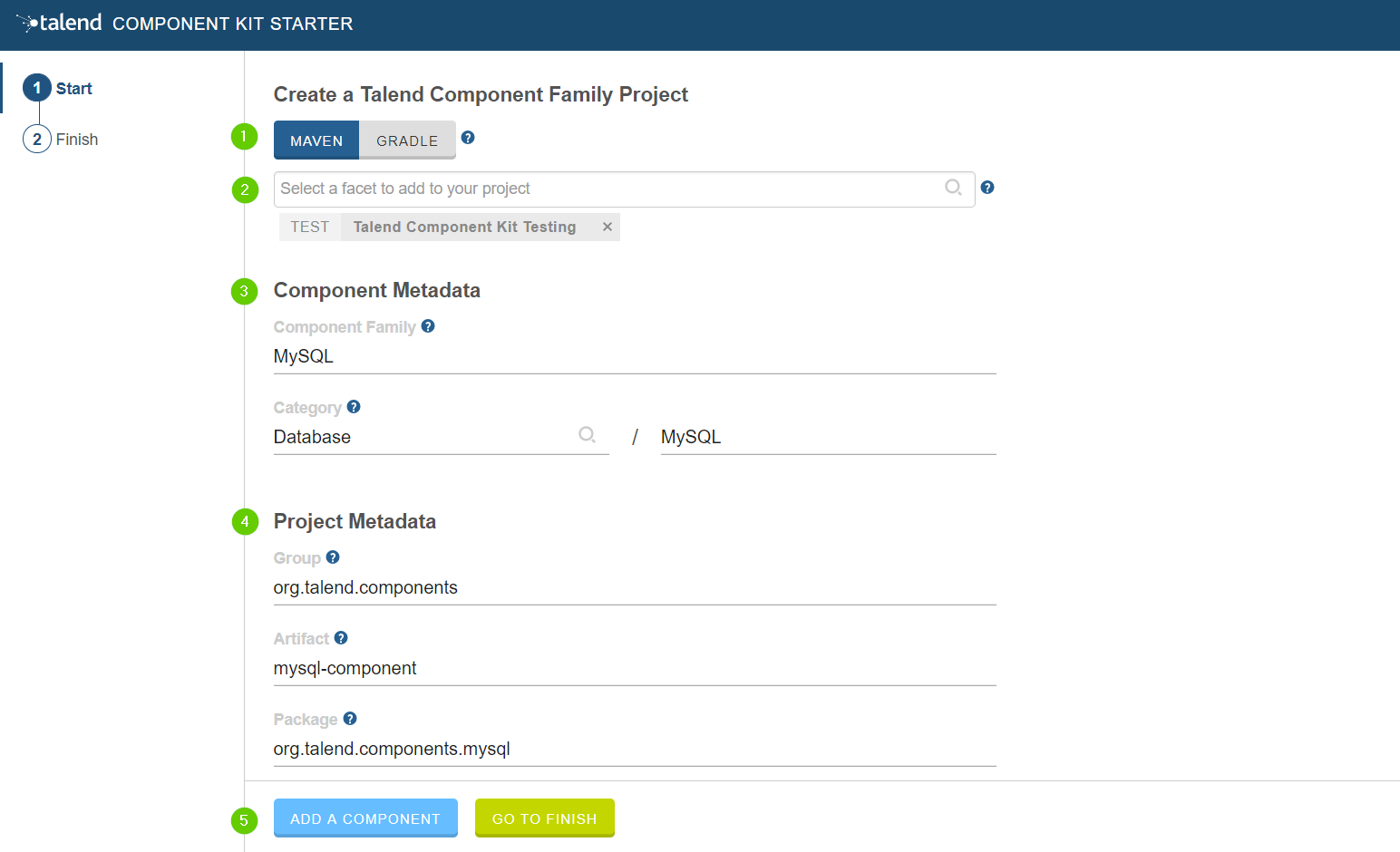

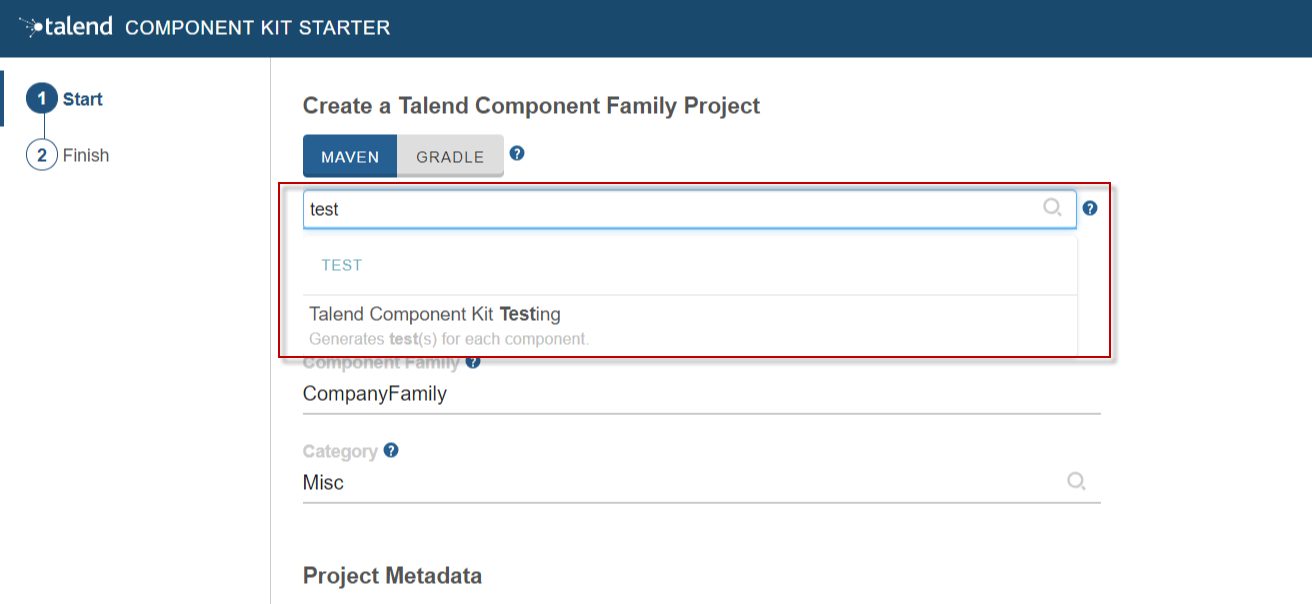

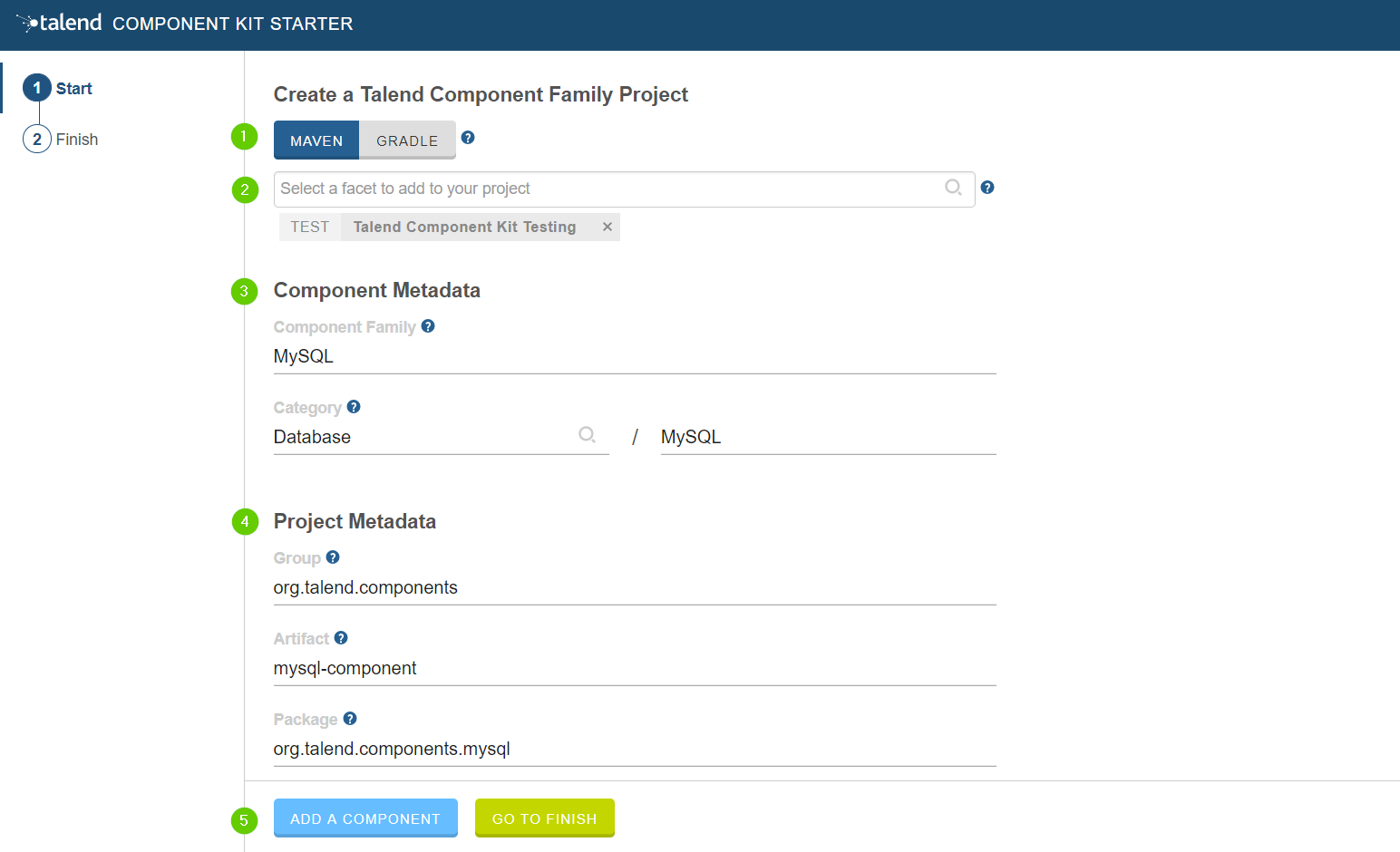

Select your build tool. This tutorial uses Maven, but you can select Gradle instead.

-

Add the Talend Component Kit Testing facet to your project to automatically generate unit tests for the components created later in this tutorial. Add any facet you need.

-

Enter the Component Family of the components you want to develop in the project. This name must be a valid java name and is recommended to be capitalized, for example 'MySQL'.

Once you have implemented your components in the Studio, this name is displayed in the Palette to group all of the MySQL-related components you develop, and is also part of your component name. -

Select the Category of the components you want to create in the current project. As MySQL is a kind of database, select Databases in this tutorial.

This Databases category is used and displayed as the parent family of the MySQL group in the Palette of the Studio. -

Complete the project metadata by entering the Group, Artifact and Package.

-

Click the ADD A COMPONENT button to start designing your components.
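The starter requires the Component Family to be a valid Java name and recommends capitalizing it. A minimal stdlib sketch of both checks follows; FamilyNameCheck is a hypothetical helper, and it does not reject Java keywords such as class.

```java
class FamilyNameCheck {
    // True if name is a syntactically valid Java identifier.
    // Keywords such as "class" are not rejected by this sketch.
    static boolean isValidJavaName(String name) {
        if (name == null || name.isEmpty() || !Character.isJavaIdentifierStart(name.charAt(0))) {
            return false;
        }
        for (int i = 1; i < name.length(); i++) {
            if (!Character.isJavaIdentifierPart(name.charAt(i))) {
                return false;
            }
        }
        return true;
    }

    // The recommended (not mandatory) style: a capitalized name such as MySQL.
    static boolean isCapitalized(String name) {
        return isValidJavaName(name) && Character.isUpperCase(name.charAt(0));
    }

    public static void main(String[] args) {
        for (String candidate : new String[] {"MySQL", "mySql", "My SQL", "2Databases"}) {
            System.out.println(candidate + " valid=" + isValidJavaName(candidate)
                    + " capitalized=" + isCapitalized(candidate));
        }
    }
}
```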

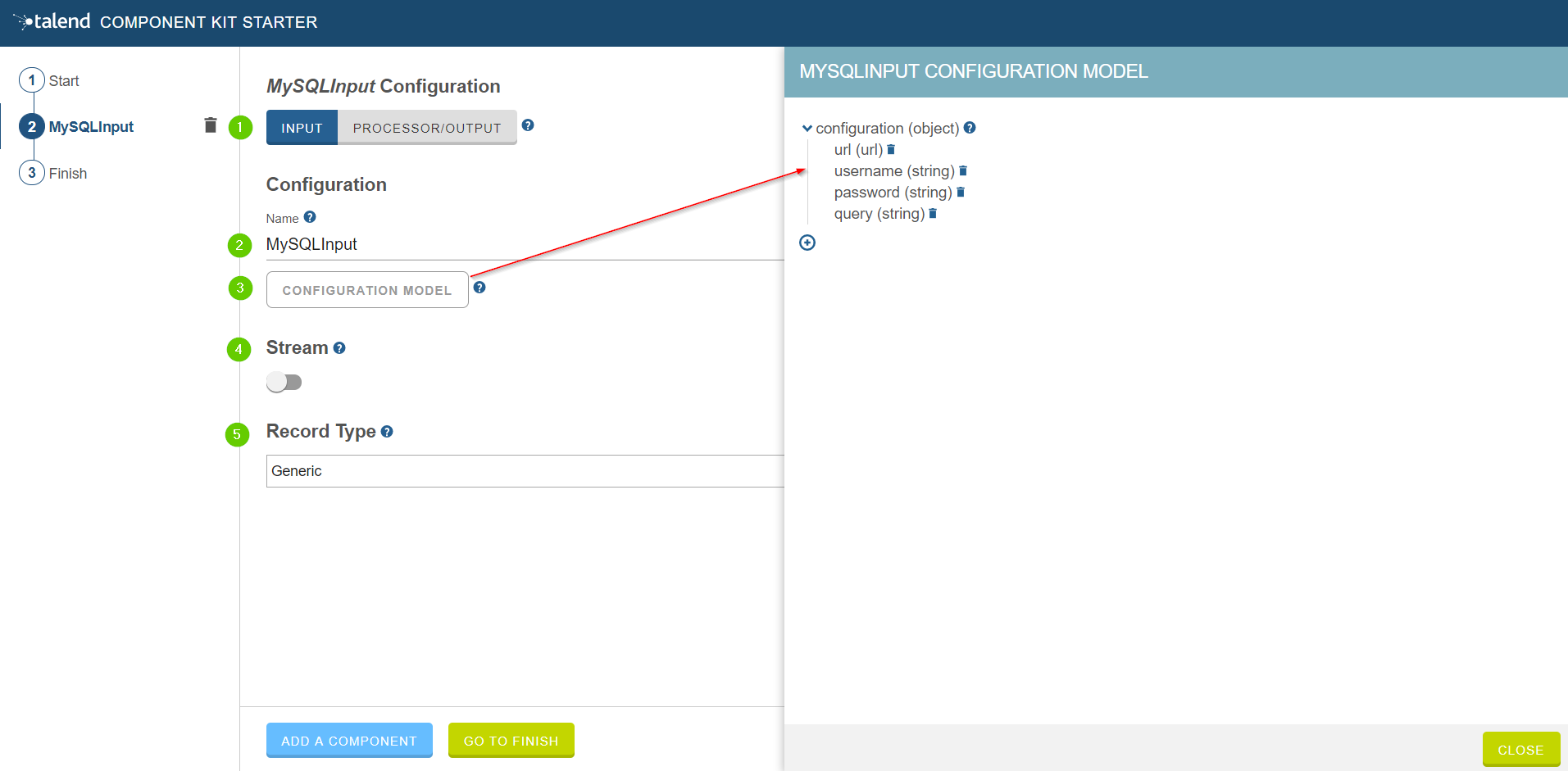

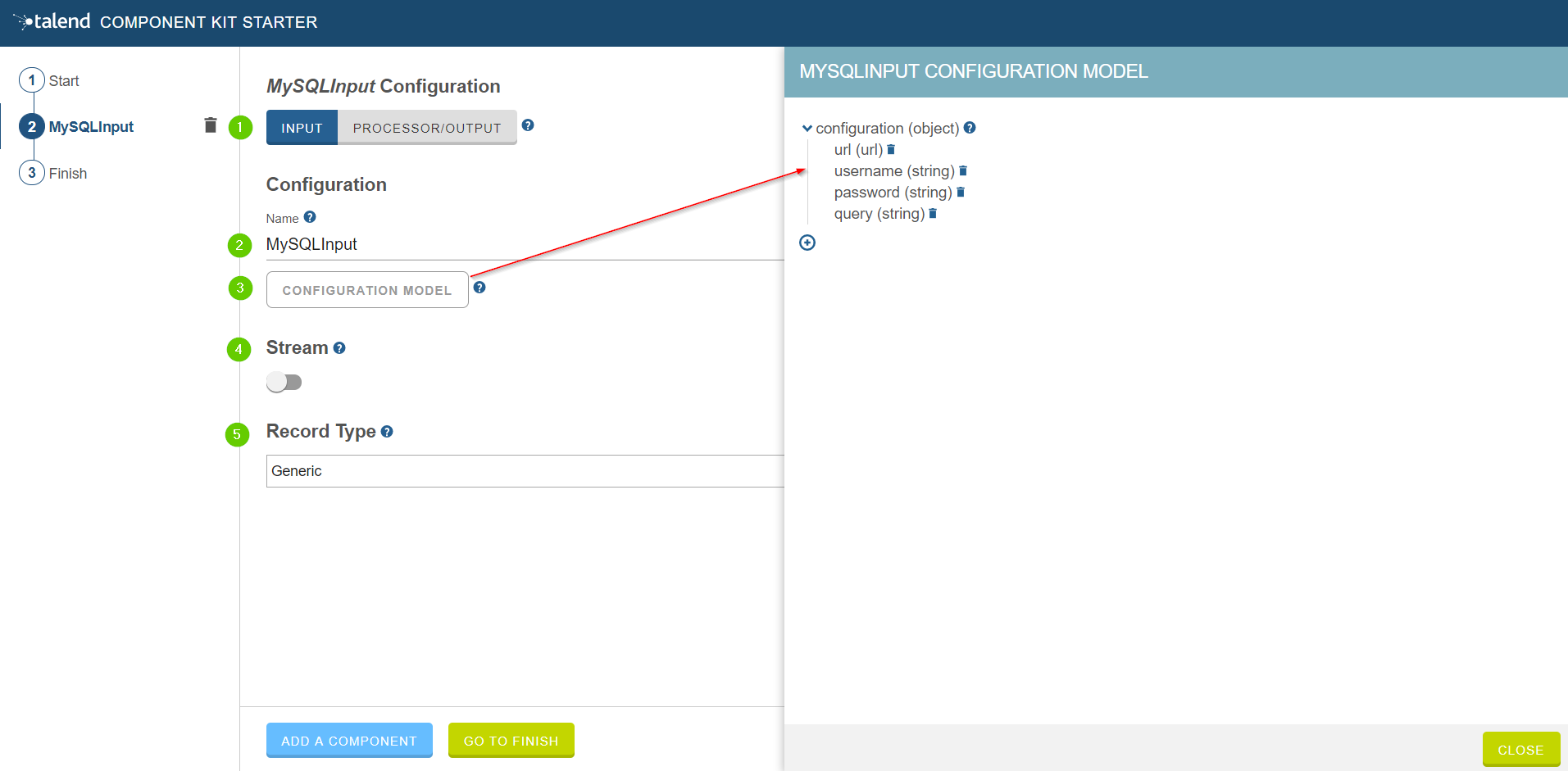

Creating an Input component

When clicking ADD A COMPONENT in the starter, a new step that allows you to define a new component is created in your project.

The intent in this tutorial is to create an input component that connects to a MySQL database, executes a SQL query and gets the result.

- Choose the component type. INPUT in this case.
- Enter the component name. For example, MySQLInput.
- Click CONFIGURATION MODEL. This button lets you specify the required configuration for the component.
- For each parameter that you need to add, click the (+) button on the right panel. Enter the name and choose the type of the parameter, then click the tick button to save the changes.
  In this tutorial, to be able to execute a SQL query on the input MySQL database, the configuration requires the following parameters:
  - a connection URL (string)
  - a username (string)
  - a password (string)
  - the SQL query to be executed (string).

  Closing the configuration panel on the right does not delete your configuration.
- Specify whether the component issues a stream or not. In this tutorial, the MySQL input component created is an ordinary (non-streaming) component. In this case, leave the toggle button disabled.
- Select the Record Type generated by the component. In this tutorial, select Generic because the component is designed to generate JSON records.
  You can also select Custom to define a POJO that represents your records.

Your input component is now defined. You can add another component or generate and download your project.
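The four parameters above end up as fields of a configuration class in the generated project. The plain POJO below only mirrors them to illustrate a basic sanity check; it is a hypothetical sketch, not the class the starter generates (which uses @Option fields). It is Serializable because configurations travel with mappers and processors to worker nodes, and it assumes the MySQL Connector/J URL prefix jdbc:mysql://. An empty password is accepted on purpose, since some MySQL accounts have none.

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Hypothetical mirror of the MySQLInput configuration model (not generated code).
class MySQLInputConfigSketch implements Serializable {
    private static final long serialVersionUID = 1L;

    String url;       // for example jdbc:mysql://localhost:3306/mydb
    String username;
    String password;  // may be empty
    String query;     // the SQL query to execute

    // Returns human-readable problems; an empty list means the values look usable.
    List<String> validate() {
        List<String> problems = new ArrayList<>();
        if (url == null || !url.startsWith("jdbc:mysql://")) {
            problems.add("url must start with jdbc:mysql://");
        }
        if (username == null || username.isEmpty()) {
            problems.add("username is required");
        }
        if (query == null || query.trim().isEmpty()) {
            problems.add("query is required");
        }
        return problems;
    }
}
```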

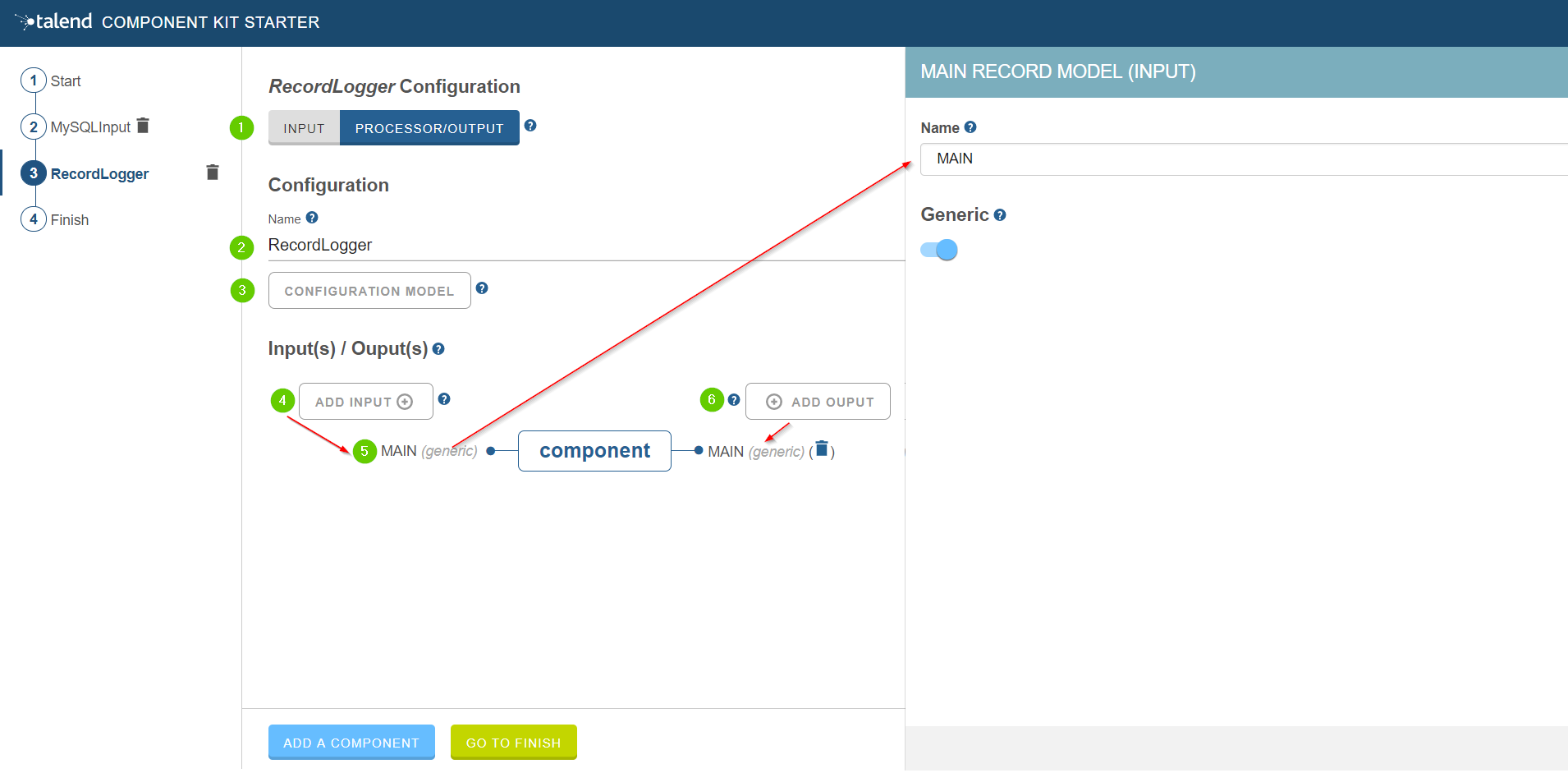

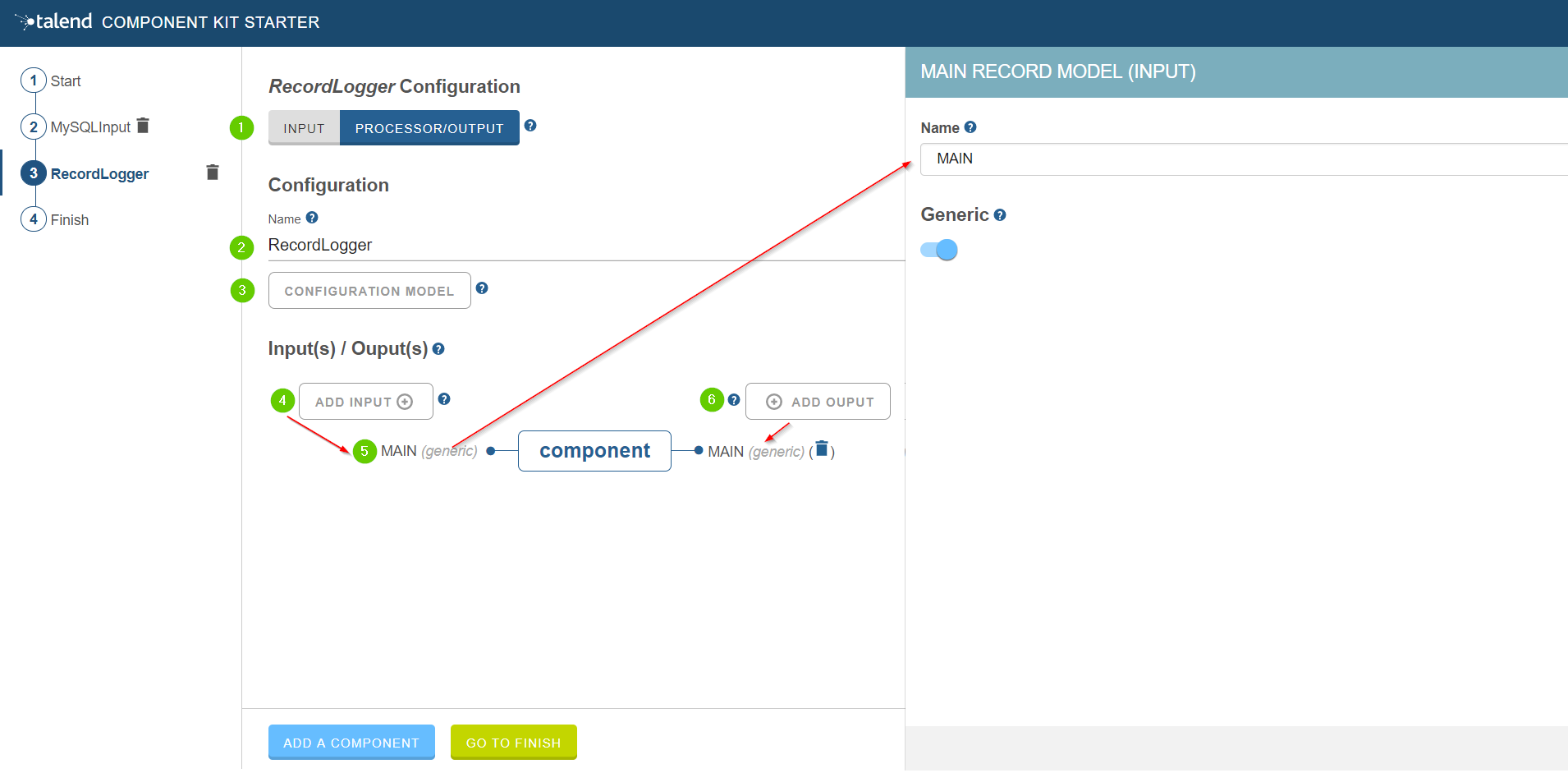

Creating a Processor component

When clicking ADD A COMPONENT in the starter, a new step that allows you to define a new component is created in your project.

The intent in this tutorial is to create a simple processor component that receives a record, logs it and returns it as is.

- Choose the component type. PROCESSOR/OUTPUT in this case.
- Enter the component name. For example, RecordLogger, as the processor created in this tutorial logs the records.
- Specify the CONFIGURATION MODEL of the component. In this tutorial, the component doesn’t need any specific configuration. Skip this step.
- Define the Input(s) of the component. For each input that you need to define, click ADD INPUT. In this tutorial, only one input is needed to receive the record to log.
- Click the input name to access its configuration. You can change the name of the input and define its structure using a POJO. If you added several inputs, repeat this step for each one of them.
  The input in this tutorial is a generic record. Enable the Generic option.
- Define the Output(s) of the component. For each output that you need to define, click ADD OUTPUT. In this tutorial, only one generic output is needed to return the received record.
  Outputs can be configured the same way as inputs (see previous steps).

Make sure to check the configuration of inputs and outputs, as they are not set to Generic by default.

Your processor component is now defined. You can add another component or generate and download your project.
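The pass-through behavior of RecordLogger can be sketched in plain Java. In a generated processor the output branch is typically received as an emitter parameter of the @ElementListener method; here Emitter is a hypothetical stand-in interface and records are plain strings, so the sketch runs without any Talend dependency.

```java
import java.util.function.Consumer;

// Hypothetical stand-in for an output branch; not the framework's own type.
interface Emitter<T> {
    void emit(T value);
}

// Hypothetical pass-through processor: logs each record and forwards it unchanged.
class RecordLoggerSketch {
    private final Consumer<String> log;

    RecordLoggerSketch(Consumer<String> log) {
        this.log = log;
    }

    // One call per incoming record, like an @ElementListener method.
    void onNext(String record, Emitter<String> output) {
        log.accept("record: " + record);
        output.emit(record);   // the same record, not a modified copy
    }

    public static void main(String[] args) {
        RecordLoggerSketch processor = new RecordLoggerSketch(System.out::println);
        processor.onNext("{\"id\":1}", value -> System.out.println("emitted " + value));
    }
}
```

Keeping the log sink injectable (a Consumer) rather than calling System.out directly makes the behavior easy to check in unit tests.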

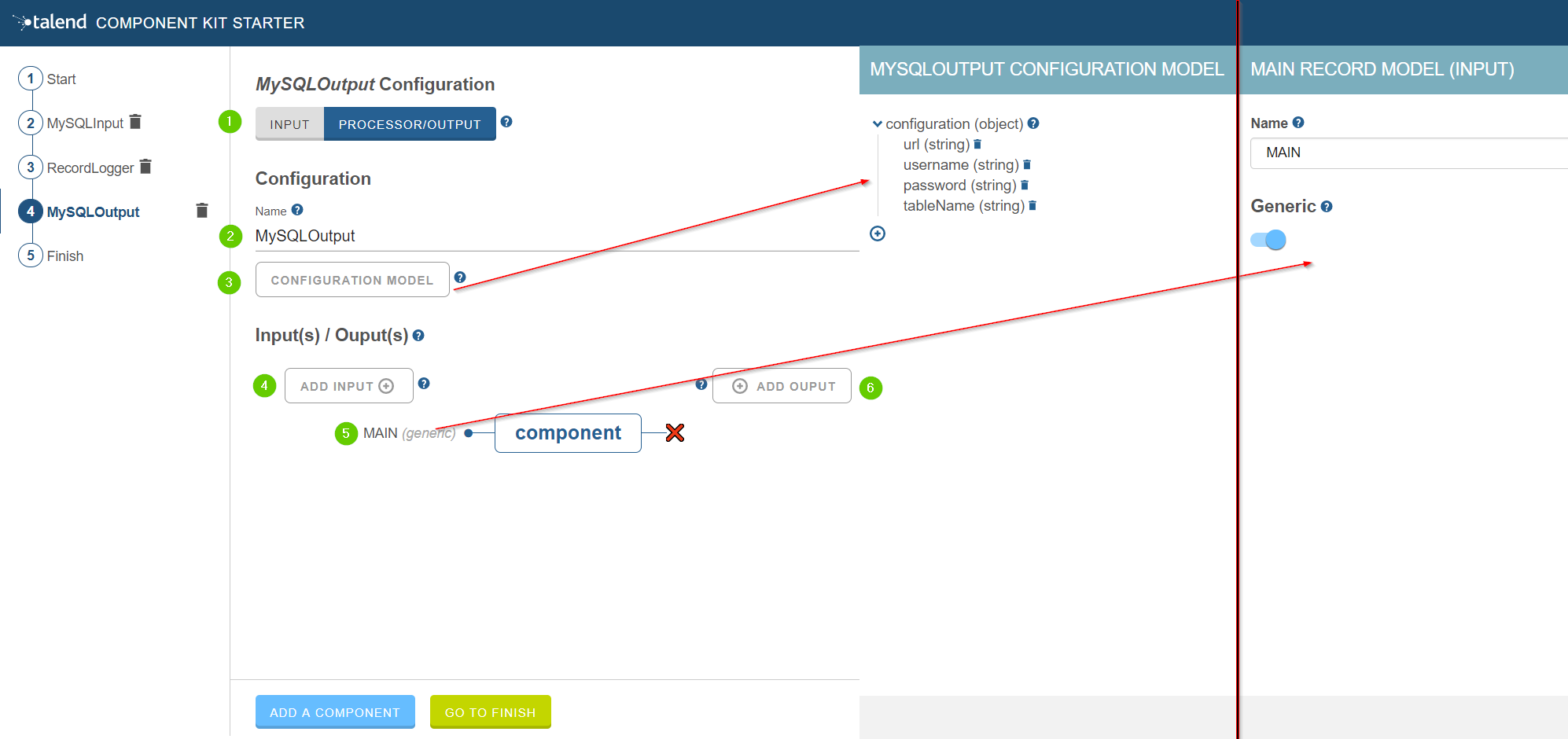

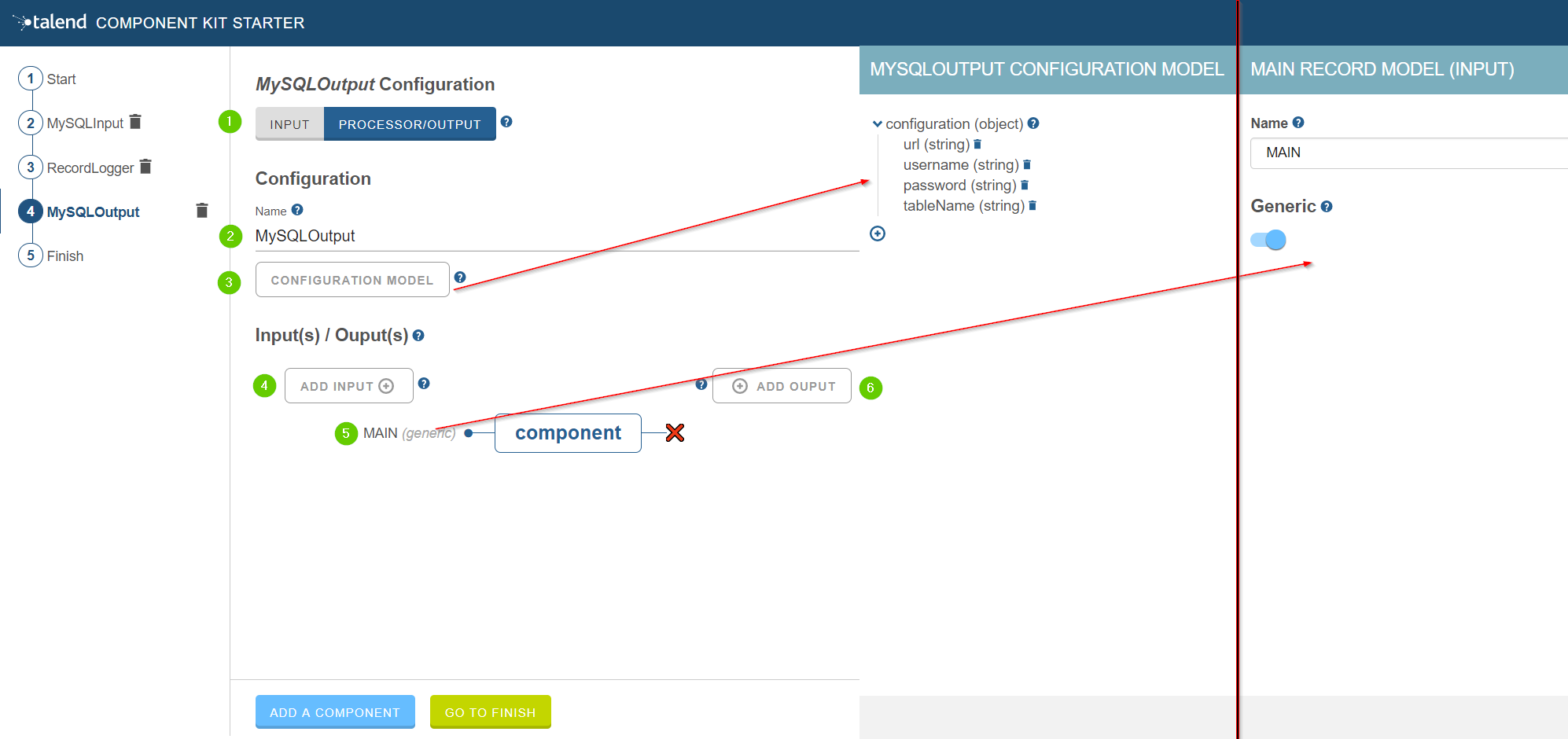

Creating an Output component

When clicking ADD A COMPONENT in the starter, a new step that allows you to define a new component is created in your project.

The intent in this tutorial is to create an output component that receives a record and inserts it into a MySQL database table.

Output components are processors without any output. In other words, an output is a processor that does not produce any records.

- Choose the component type. PROCESSOR/OUTPUT in this case.
- Enter the component name. For example, MySQLOutput.
- Click CONFIGURATION MODEL. This button lets you specify the required configuration for the component.
- For each parameter that you need to add, click the (+) button on the right panel. Enter the name and choose the type of the parameter, then click the tick button to save the changes.
  In this tutorial, to be able to insert a record in the output MySQL database, the configuration requires the following parameters:
  - a connection URL (string)
  - a username (string)
  - a password (string)
  - the name of the table to insert the record into (string).

  Closing the configuration panel on the right does not delete your configuration.
- Define the Input(s) of the component. For each input that you need to define, click ADD INPUT. In this tutorial, only one input is needed.
- Click the input name to access its configuration. You can change the name of the input and define its structure using a POJO. If you added several inputs, repeat this step for each one of them.
  The input in this tutorial is a generic record. Enable the Generic option.
- Do not create any output because the component does not produce any record. This is the only difference between an output and a processor component.

Your output component is now defined. You can add another component or generate and download your project.
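To insert a record into the configured table, the output component needs an INSERT statement built from the table name and the record's field names. The helper below is a hypothetical sketch of that step only: identifiers are quoted with MySQL backticks because a table or column name cannot be bound as a query parameter, while values are left as ? placeholders.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

class InsertSqlBuilder {
    // Quotes a MySQL identifier with backticks, doubling any embedded backtick.
    static String quote(String identifier) {
        return "`" + identifier.replace("`", "``") + "`";
    }

    // Builds a parameterized INSERT; values are bound separately, never concatenated.
    static String build(String table, List<String> columns) {
        StringJoiner names = new StringJoiner(", ", "(", ")");
        StringJoiner marks = new StringJoiner(", ", "(", ")");
        for (String column : columns) {
            names.add(quote(column));
            marks.add("?");
        }
        return "INSERT INTO " + quote(table) + " " + names + " VALUES " + marks;
    }

    public static void main(String[] args) {
        System.out.println(build("users", Arrays.asList("firstName", "lastName")));
        // INSERT INTO `users` (`firstName`, `lastName`) VALUES (?, ?)
    }
}
```

In a real output, the resulting string could be passed to java.sql.Connection.prepareStatement and each value bound with setObject, which keeps record values out of the SQL text.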

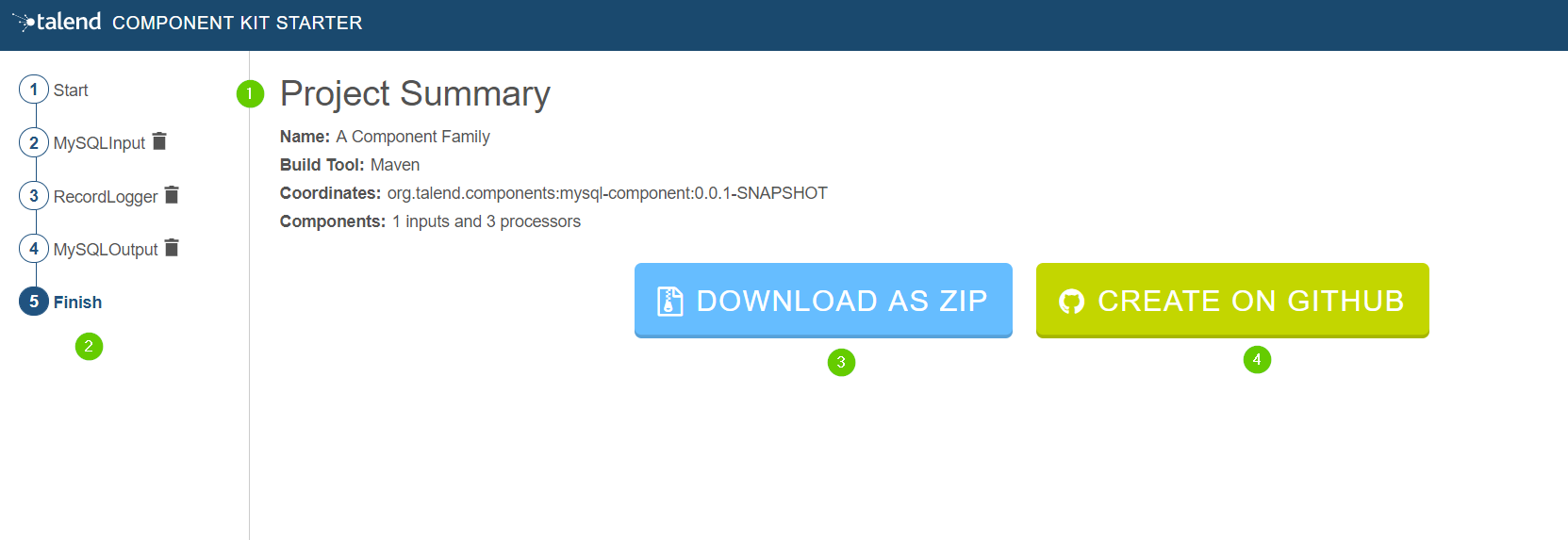

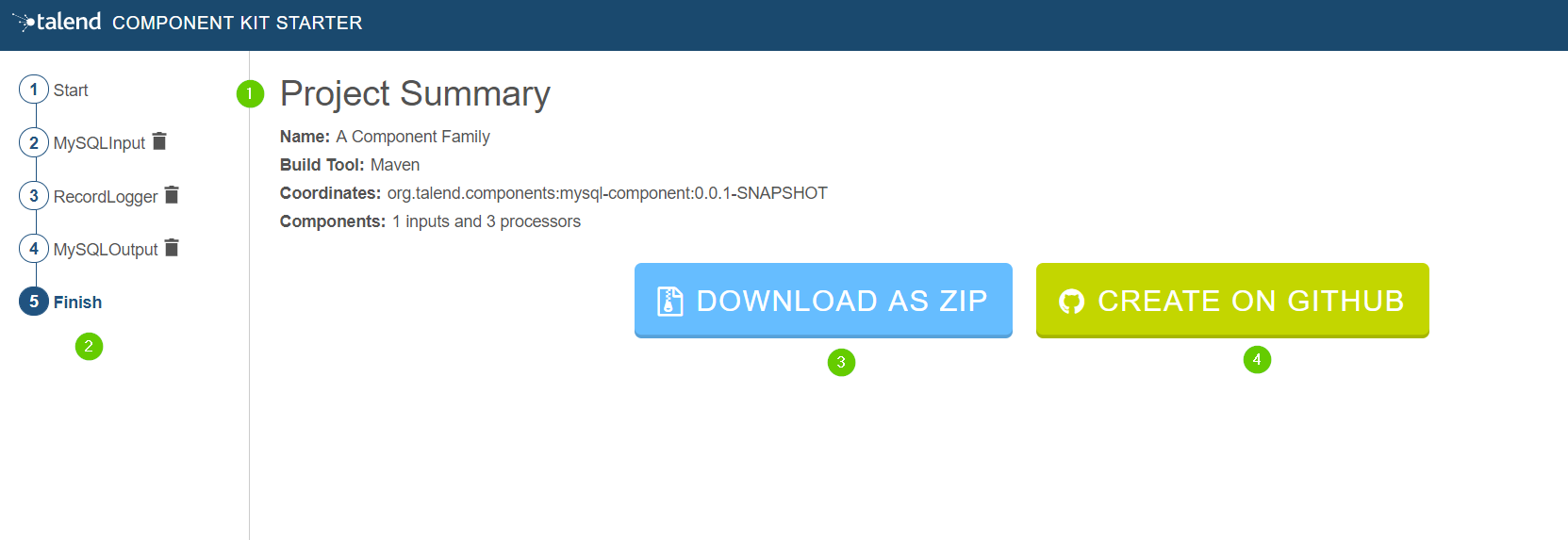

Generating and downloading the final project

Once your project is configured and all the components you need are created, you can generate and download the final project. In this tutorial, the project was configured and three components of different types (input, processor and output) have been defined.

- Click GO TO FINISH at the bottom of the page. You are redirected to a page that summarizes the project. On the left panel, you can also see all the components that you added to the project.
- Generate the project using one of the two options available:
  - Download it locally as a ZIP file using the DOWNLOAD AS ZIP button.
  - Create a GitHub repository and push the project to it using the CREATE ON GITHUB button.

In this tutorial, the project is downloaded to the local machine as a ZIP file.

Compiling and exploring the generated project files

Once the package is available on your machine, you can compile it using the build tool selected when configuring the project.

- In this tutorial, Maven is the build tool selected for the project.
  In the project directory, execute the mvn package command.
  If you don’t have Maven installed on your machine, you can use the Maven wrapper provided in the generated project, by executing the ./mvnw package command.
- If you have created a Gradle project, you can compile it using the gradle build command or using the Gradle wrapper: ./gradlew build.

The generated project code contains documentation that can guide and help you implement the component logic. Import the project to your favorite IDE to start the implementation.

The next tutorial shows how to implement an Input component in detail.

Generating a project using IntelliJ plugin

Once the plugin is installed, you can generate a component project.

- Select File > New > Project.
- In the New Project wizard, choose Talend Component and click Next.
  The plugin loads the component starter and lets you design your components. For more information about the Talend Component Kit starter, check this tutorial.
- Once your project is configured, select Next, then click Finish.

The project is automatically imported into IntelliJ IDEA using the build tool that you have chosen.

Implementing components

Once you have generated a project, you can start implementing the logic and layout of your components and iterate on it. Depending on the type of component you want to create, the logic implementation can differ. However, the layout and component metadata are defined the same way for all types of components in your project. The main steps are:

In some cases, you will require specific implementations to handle more advanced cases, such as:

You can also make certain configurations reusable across your project by defining services. Using your Java IDE along with a build tool supported by the framework, you can then compile your components to test and deploy them to Talend Studio or other Talend applications:

In any case, follow these best practices to ensure the components you develop are optimized.

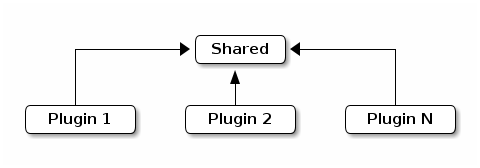

You can also learn more about component loading and plugins here:

Registering components

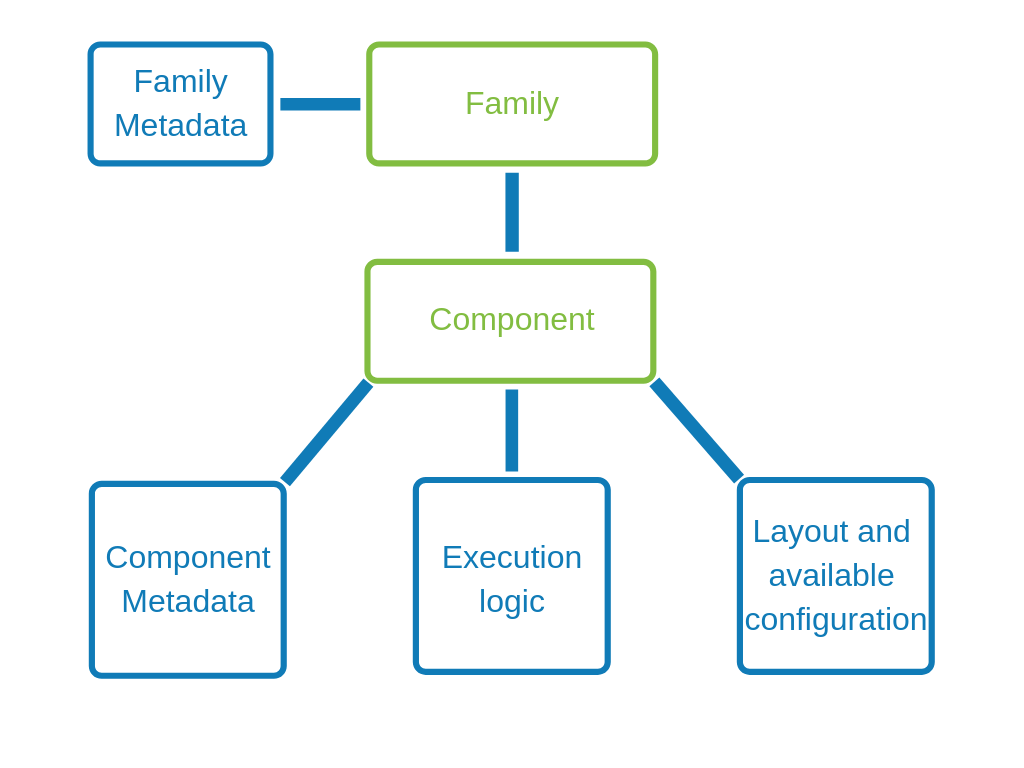

Before implementing the logic and configuration of a component, you need to specify the family and the category it belongs to, the component type and name, as well as a few other generic parameters. This set of metadata, and more particularly the family, categories and component type, is mandatory to recognize and load the component into Talend Studio or Cloud applications.

Some of these parameters are handled at the project generation using the starter, but can still be accessed and updated later on.

Component family and categories

The family and category of a component are automatically written in the package-info.java file of the component package, using the @Components annotation. By default, these parameters are already configured in this file when you import your project in your IDE. Their values correspond to what was defined during the project definition with the starter.

Multiple components can share the same family and category value, but the family + name pair must be unique for the system.

A component can belong to one family only and to one or several categories. If not specified, the category defaults to Misc.
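These registration rules can be summarized with a small in-memory registry. It is a hypothetical sketch, not how Talend Studio actually loads components: the family + name pair is the key (so each component has exactly one family and a duplicate pair is rejected), a component may list several categories, and an empty category list falls back to Misc.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ComponentRegistrySketch {
    static final String DEFAULT_CATEGORY = "Misc";

    // Keyed by "family#name"; the separator is arbitrary in this sketch.
    private final Map<String, List<String>> categoriesById = new HashMap<>();

    // Registers a component; duplicate family + name pairs are rejected.
    void register(String family, String name, String... categories) {
        String id = family + "#" + name;
        if (categoriesById.containsKey(id)) {
            throw new IllegalStateException("Duplicate component: " + id);
        }
        List<String> cats = categories.length == 0
                ? Collections.singletonList(DEFAULT_CATEGORY)
                : Arrays.asList(categories);
        categoriesById.put(id, cats);
    }

    List<String> categoriesOf(String family, String name) {
        return categoriesById.get(family + "#" + name);
    }
}
```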

The package-info.java also defines the component family icon, which is different from the component icon. You can learn how to customize this icon in this section.

Here is a sample package-info.java:

@Components(name = "my_component_family", categories = "My Category")
package org.talend.sdk.component.sample;

import org.talend.sdk.component.api.component.Components;

Another example with an existing component:

@Components(name = "Salesforce", categories = {"Business", "Cloud"})
package org.talend.sdk.component.sample;

import org.talend.sdk.component.api.component.Components;

Component icon and version

Components can require metadata to be integrated in Talend Studio or Cloud platforms.

Metadata is set on the component class and belongs to the org.talend.sdk.component.api.component package.

When you generate your project and import it in your IDE, icon and version both come with a default value.

- @Icon: Sets an icon key used to represent the component. You can use a custom key with the custom() method, but the icon may not be rendered properly. The icon defaults to Star. Learn how to set a custom icon for your component in this section.
- @Version: Sets the component version. 1 by default. Learn how to manage different versions and migrations between your component versions in this section.

@Version(1)

@Icon(FILE_XML_O)

@PartitionMapper(name = "jaxbInput")

public class JaxbPartitionMapper implements Serializable {

// ...

}

Defining a custom icon for a component or component family

Every component family and component needs to have a representative icon.

You can use one of the icons provided by the framework or you can use a custom icon.

- For the component family, the icon is defined in the package-info.java file.
- For the component itself, you need to declare the icon in the component class.

To use a custom icon, you need to have the icon file placed in the resources/icons folder of the project.

The icon file needs to have a name following the convention IconName_icon32.png, where you can replace IconName by the name of your choice.

@Icon(value = Icon.IconType.CUSTOM, custom = "IconName")

Defining an input component logic

Input components are the components generally placed at the beginning of a Talend job. They are in charge of retrieving the data that will later be processed in the job.

An input component is primarily made of three distinct logics:

- The execution logic of the component itself, defined through a partition mapper.
- The configurable part of the component, defined through the mapper configuration.
- The source logic, defined through a producer.

Before implementing the component logic and defining its layout and configurable fields, make sure you have specified its basic metadata, as detailed in this document.

Defining a partition mapper

What is a partition mapper

A PartitionMapper is a component able to split itself to make the execution more efficient.

This concept is borrowed from big data and useful in this context only (BEAM executions).

The idea is to divide the work before executing it in order to reduce the overall execution time.

The process is the following:

- The size of the data you work on is estimated. This part can be heuristic and not very precise.
- From that size, the execution engine (runner for Beam) requests the mapper to split itself into N mappers, each with a subset of the overall work.
- The leaf (final) mapper is used as a Producer (actual reader) factory.

This kind of component must be Serializable to be distributable.
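The estimate and split steps above can be illustrated without the framework. The following is a minimal, hypothetical sketch, assuming a source that can be addressed as a byte range: RangeMapper, estimateSize() and split() are illustrative names standing in for the @Assessor and @Split methods, and the Talend annotations are left out so that the class compiles on its own. The third step (using a leaf mapper as a Producer factory) is not modeled.

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Hypothetical mapper over the byte range [start, end) of some source.
// Plain Java only: @PartitionMapper, @Assessor and @Split are intentionally omitted.
class RangeMapper implements Serializable {
    private final long start;
    private final long end;

    RangeMapper(final long start, final long end) {
        this.start = start;
        this.end = end;
    }

    // Role of @Assessor: estimated size of the work, here simply the range length.
    long estimateSize() {
        return end - start;
    }

    // Role of @Split: cut the range into sub-mappers of at most desiredSize bytes.
    List<RangeMapper> split(final long desiredSize) {
        final List<RangeMapper> parts = new ArrayList<>();
        if (desiredSize <= 0 || estimateSize() <= desiredSize) {
            parts.add(this); // already small enough: keep a single mapper
            return parts;
        }
        for (long from = start; from < end; from += desiredSize) {
            parts.add(new RangeMapper(from, Math.min(from + desiredSize, end)));
        }
        return parts;
    }

    long start() {
        return start;
    }

    long end() {
        return end;
    }
}
```

For a 100-byte range and a requested partition size of 30, split returns four sub-mappers covering 0-30, 30-60, 60-90 and 90-100. Each sub-mapper stays Serializable so that a runner could ship it to another worker.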


Implementing a partition mapper

A partition mapper requires three methods marked with specific annotations:

- @Assessor for the evaluating method
- @Split for the dividing method
- @Emitter for the Producer factory

@Assessor

The Assessor method returns the estimated size of the data related to the component (depending on its configuration).

It must return a Number and must not take any parameter.

For example:

@Assessor

public long estimateDataSetByteSize() {

return ....;

}

@Split

The Split method returns a collection of partition mappers and can optionally take a @PartitionSize long value as parameter, which is the requested size of the dataset per sub partition mapper.

For example:

@Split

public List<MyMapper> split(@PartitionSize final long desiredSize) {

return ....;

}

Defining the producer method

The Producer method defines the source logic of an input component. It handles the interaction with a physical source and produces input data for the processing flow.

A producer must have a @Producer method without any parameter. It is triggered by the @Emitter of the partition mapper and can return any data. It is defined in the <component_name>Source.java file:

@Producer

public MyData produces() {

return ...;

}

Defining a processor or an output component logic

Processors and output components are the components in charge of reading, processing and transforming data in a Talend job, as well as passing it to its required destination.

Before implementing the component logic and defining its layout and configurable fields, make sure you have specified its basic metadata, as detailed in this document.

Defining a processor

What is a processor

A Processor is a component that converts incoming data to a different model.

A processor must have a method decorated with @ElementListener taking an incoming data and returning the processed data:

@ElementListener

public MyNewData map(final MyData data) {

return ...;

}

Processors must be Serializable because they are distributed components.

If you just need to access data on a map-based ruleset, you can use JsonObject as parameter type.

From there, Talend Component Kit wraps the data to allow you to access it as a map. The parameter type is not enforced.

This means that if you know you will get a SuperCustomDto, then you can use it as parameter type. But for generic components that are reusable in any chain, it is highly encouraged to use JsonObject until you have an evaluation language-based processor that has its own way to access components.

For example:

@ElementListener

public MyNewData map(final JsonObject incomingData) {

String name = incomingData.getString("name");

int age = incomingData.getInt("age");

return ...;

}

// equivalent to (using a POJO instead of JsonObject)

public class Person {

private String name;

private int age;

// getters/setters

}

@ElementListener

public MyNewData map(final Person person) {

String name = person.getName();

int age = person.getAge();

return ...;

}

A processor also supports @BeforeGroup and @AfterGroup methods, which must not have any parameter and must return void. Any other result is ignored.

The runtime uses these methods to mark chunks of data whose size it estimates to be suitable for the execution flow.

Because the size is estimated, the size of a group can vary. It is even possible to have groups of size 1.


It is recommended to batch records, for performance reasons:

@BeforeGroup

public void initBatch() {

// ...

}

@AfterGroup

public void endBatch() {

// ...

}

You can optimize the data batch processing by using the maxBatchSize parameter. This parameter is automatically implemented on the component when it is deployed to a Talend application. Only the logic needs to be implemented. Learn how to implement chunking/bulking in this document.

Defining multiple outputs

In some cases, you may need to split the output of a processor in two. A common example is to have "main" and "reject" branches where part of the incoming data is passed to a specific bucket to be processed later.

To do that, you can use @Output as a replacement for the returned value:

@ElementListener

public void map(final MyData data, @Output final OutputEmitter<MyNewData> output) {

output.emit(createNewData(data));

}

Alternatively, you can pass a string that represents the new branch:

@ElementListener

public void map(final MyData data,

@Output final OutputEmitter<MyNewData> main,

@Output("rejected") final OutputEmitter<MyNewDataWithError> rejected) {

if (isRejected(data)) {

rejected.emit(createNewData(data));

} else {

main.emit(createNewData(data));

}

}

// or

@ElementListener

public MyNewData map(final MyData data,

@Output("rejected") final OutputEmitter<MyNewDataWithError> rejected) {

if (isSuspicious(data)) {

rejected.emit(createNewData(data));

return createNewData(data); // in this case the processing continues but notifies another channel

}

return createNewData(data);

}

Defining multiple inputs

Having multiple inputs is similar to having multiple outputs, except that an OutputEmitter wrapper is not needed:

@ElementListener

public MyNewData map(@Input final MyData data1, @Input("input2") final MyData2 data2) {

return createNewData(data1, data2);

}

@Input takes the input name as parameter. If no name is set, it defaults to the "main (default)" input branch. It is recommended to use the default branch when possible and to avoid naming branches according to the component semantics.

Implementing batch processing

Depending on several requirements, including the system capacity and business needs, a processor can process records differently.

For example, for real-time or near-real-time processing, it is more efficient to process small batches of data more often. On the other hand, for one-time processing, it is better to adapt the way the component handles batches of data to the system capacity.

By default, the runtime automatically estimates a group size that it considers good, according to the system capacity, to process the data. This group size can sometimes be too big and not optimal for your needs or for your system to handle effectively and correctly.

Users can then customize this size from the component settings in Talend Studio, by specifying a maxBatchSize that adapts the size of each group of data to be processed.

The estimated group size logic is automatically implemented when a component is deployed to a Talend application. Besides defining the @BeforeGroup and @AfterGroup logic detailed below, no action is required on the implementation side of the component.

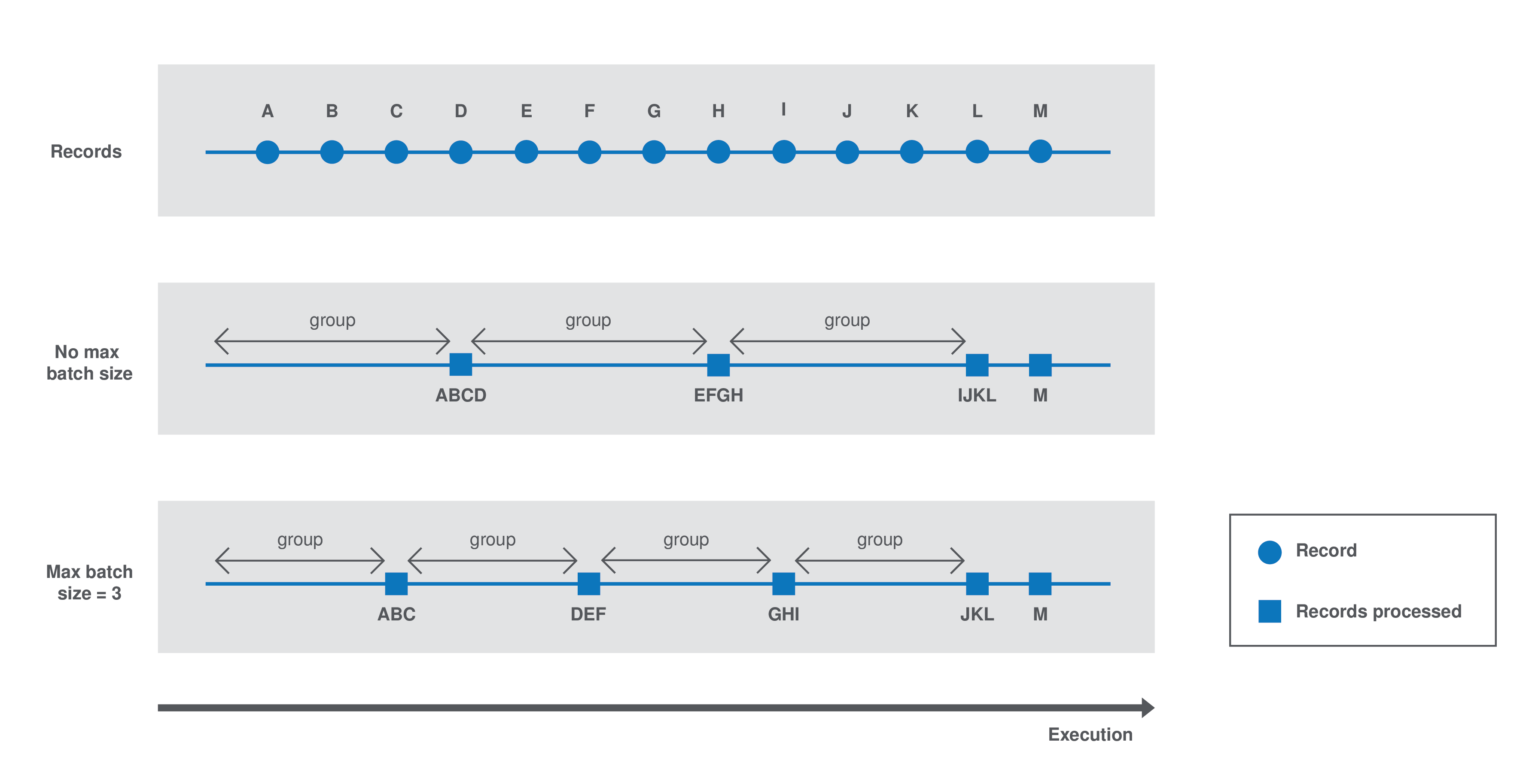

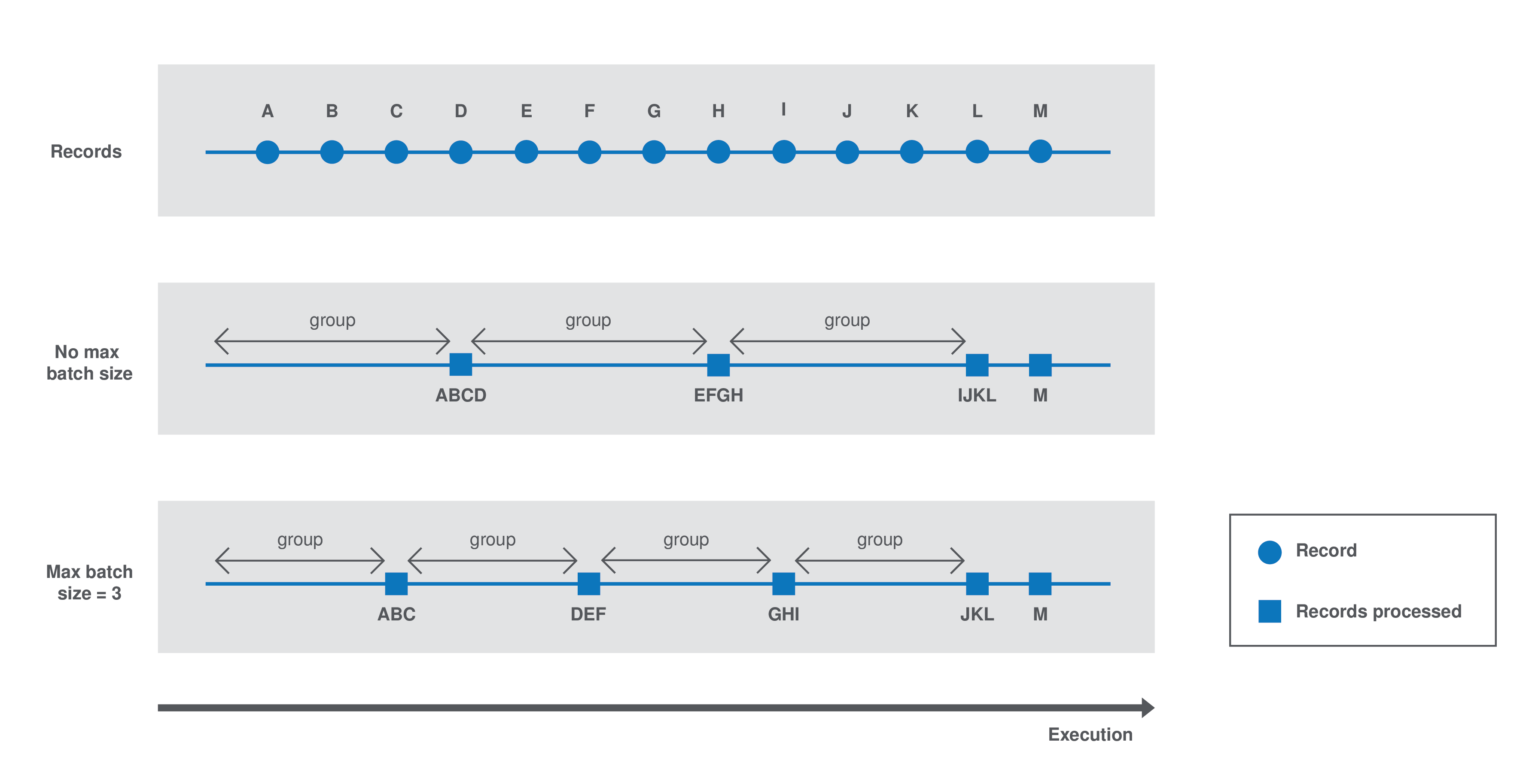

The component batch processes the data as follows:

- Case 1: No maxBatchSize is specified in the component configuration. The runtime estimates a group size of 4. Records are processed by groups of 4.
- Case 2: The runtime estimates a group size of 4 but a maxBatchSize of 3 is specified in the component configuration. The system adapts the group size to 3. Records are processed by groups of 3.

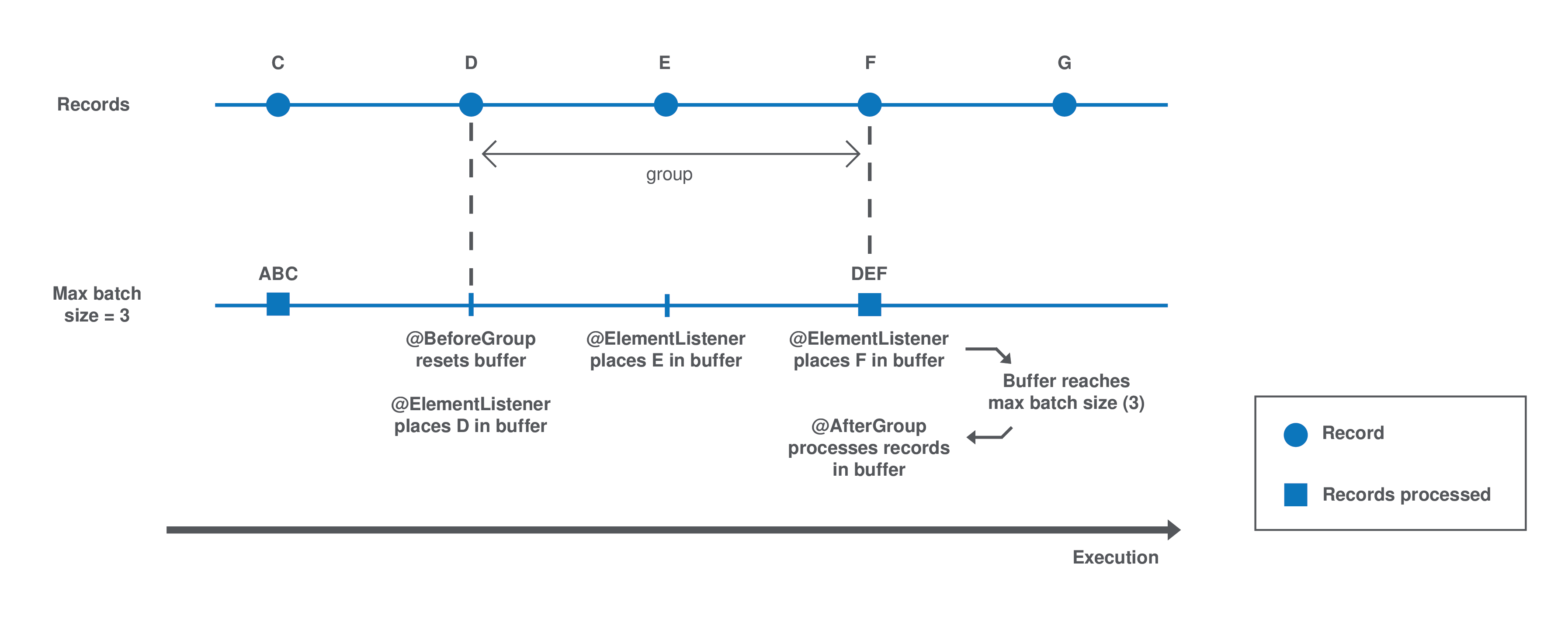

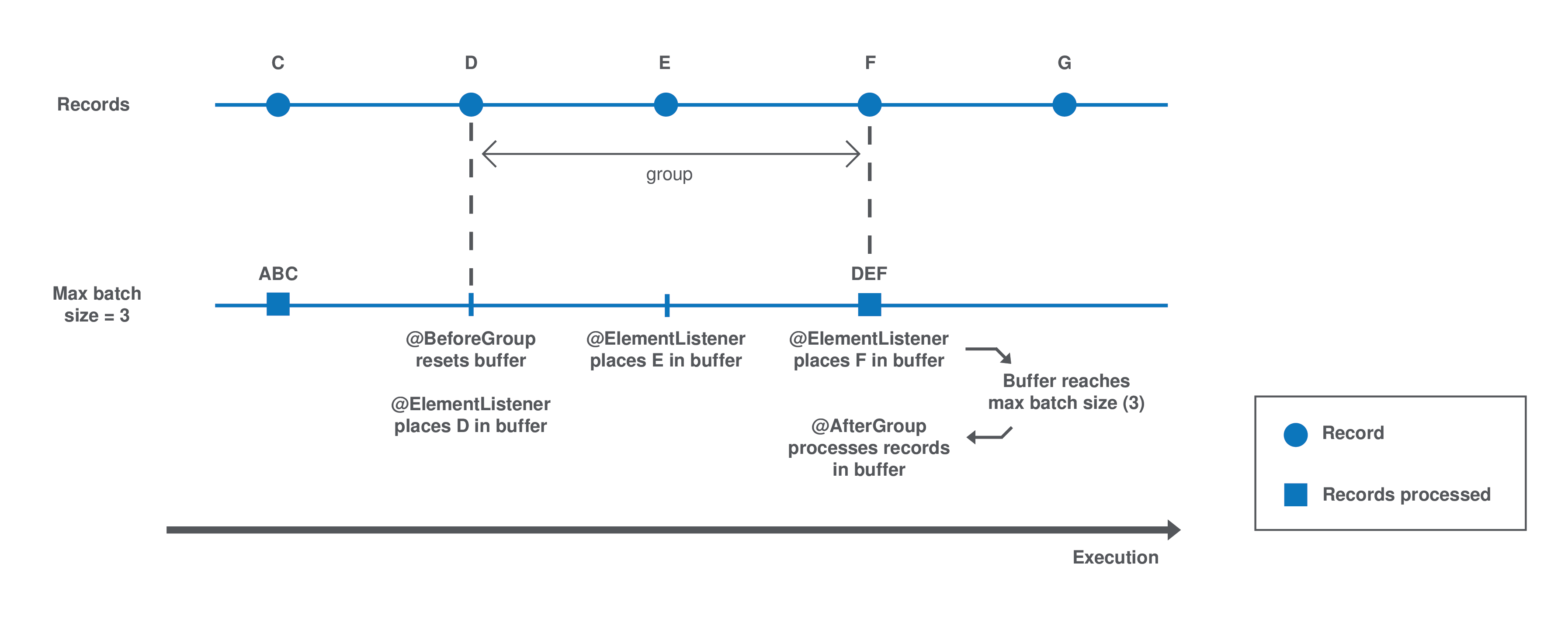

Each group is processed as follows until there is no record left:

- The @BeforeGroup method resets a record buffer at the beginning of each group.
- The records of the group are assessed one by one and placed in the buffer as follows: the @ElementListener method tests if the buffer size is greater than or equal to the defined maxBatchSize. If it is, the buffered records are processed. If not, the current record is buffered.
- The previous step happens for all records of the group. Then the @AfterGroup method tests if the buffer is empty.

You can define the following logic in the processor class:

import java.io.Serializable;

import java.util.ArrayList;

import java.util.Collection;

import javax.json.JsonObject;

import org.talend.sdk.component.api.processor.AfterGroup;

import org.talend.sdk.component.api.processor.BeforeGroup;

import org.talend.sdk.component.api.processor.ElementListener;

import org.talend.sdk.component.api.processor.Processor;

@Processor(name = "BulkOutputDemo")

public class BulkProcessor implements Serializable {

private Collection<JsonObject> buffer;

@BeforeGroup

public void begin() {

buffer = new ArrayList<>();

}

@ElementListener

public void bufferize(final JsonObject object) {

buffer.add(object);

}

@AfterGroup

public void commit() {

// save buffer records at once (bulk)

}

}

You can learn more about processors in this document.
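The BulkProcessor example only buffers records. The buffering rules described in the steps (process the buffer once it reaches maxBatchSize, then process what is left at the end of the group) can be sketched in plain Java as follows. This is an assumption-based illustration: BatchingSketch and its methods are hypothetical names that only mirror the @BeforeGroup, @ElementListener and @AfterGroup lifecycle, maxBatchSize is passed to the constructor here instead of coming from the component settings, the bulk write is simulated by collecting batches in a list, and @AfterGroup is assumed to process any remaining records.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a processor: nothing here is Talend API.
final class BatchingSketch {
    private final int maxBatchSize;
    private List<String> buffer = new ArrayList<>();
    // Each inner list simulates one bulk write.
    final List<List<String>> flushedBatches = new ArrayList<>();

    BatchingSketch(final int maxBatchSize) {
        this.maxBatchSize = maxBatchSize;
    }

    // Role of @BeforeGroup: start each group with an empty buffer.
    void beforeGroup() {
        buffer = new ArrayList<>();
    }

    // Role of @ElementListener: process the buffer once it is full, then buffer the item.
    void onElement(final String item) {
        if (buffer.size() >= maxBatchSize) {
            flush();
        }
        buffer.add(item);
    }

    // Role of @AfterGroup: process whatever is left when the group ends.
    void afterGroup() {
        if (!buffer.isEmpty()) {
            flush();
        }
    }

    // Stand-in for a bulk write: keep a copy of the batch, then empty the buffer.
    private void flush() {
        flushedBatches.add(new ArrayList<>(buffer));
        buffer.clear();
    }
}
```

With a maxBatchSize of 2 and a group of three records a, b and c, the sketch writes [a, b] when c arrives and [c] when the group ends.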

Defining an output

What is an output

An Output is a Processor that does not return any data.

Conceptually, an output is a data listener. It matches the concept of processor. Being the last component of the execution chain or returning no data makes your processor an output component:

@ElementListener

public void store(final MyData data) {

// ...

}

Defining component layout and configuration

The component configuration is defined in the <component_name>Configuration.java file of the package. It consists of defining the configurable part of the component that will be displayed in the UI.

To do that, you can specify parameters. When you import the project in your IDE, the parameters that you have specified in the starter are already present.

Parameter name

Components are configured using their constructor parameters. All parameters can be marked with the @Option annotation, which lets you give a name to them.

For the name to be correct, you must follow these guidelines:

- Use a valid Java name.
- Do not include any . character in it.
- Do not start the name with a $.

Defining a name is optional. If you don’t set a specific name, it defaults to the bytecode name. This can require you to compile with the -parameters flag to avoid ending up with names such as arg0, arg1, and so on.

| Option name | Valid |
|---|---|
| myName | Yes |
| my_name | Yes |
| my.name | No |
| $myName | No |
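The naming rules can be checked with a few lines of Java. This is a simplified, hypothetical helper (OptionNameCheck is not part of the framework): it relies on Character.isJavaIdentifierStart and Character.isJavaIdentifierPart for the "valid Java name" rule and rejects a leading $ explicitly, but it does not reject reserved keywords such as class.

```java
// Simplified checker for the option naming rules; reserved keywords are not rejected.
final class OptionNameCheck {
    static boolean isValidOptionName(final String name) {
        if (name == null || name.isEmpty() || name.startsWith("$")) {
            return false; // empty names and a leading '$' are rejected
        }
        if (!Character.isJavaIdentifierStart(name.charAt(0))) {
            return false;
        }
        for (int i = 1; i < name.length(); i++) {
            // '.' is not a Java identifier character, so dotted names fail here
            if (!Character.isJavaIdentifierPart(name.charAt(i))) {
                return false;
            }
        }
        return true;
    }

    public static void main(final String[] args) {
        for (String candidate : new String[] {"myName", "my_name", "my.name", "$myName"}) {
            System.out.println(candidate + " -> " + isValidOptionName(candidate));
        }
    }
}
```

Running main prints true for myName and my_name, and false for my.name and $myName, which matches the table.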

Parameter types

Parameter types can be primitives or complex objects with fields decorated with @Option exactly like method parameters.

It is recommended to use simple models which can be serialized in order to ease serialized component implementations.

For example:

class FileFormat implements Serializable {

@Option("type")

private FileType type = FileType.CSV;

@Option("max-records")

private int maxRecords = 1024;

}

@PartitionMapper(name = "file-reader")

public class MyFileReader implements Serializable {

public MyFileReader(@Option("file-path") final File file,

@Option("file-format") final FileFormat format) {

// ...

}

}

Using this kind of API makes the configuration extensible and component-oriented, which allows you to define all you need.

The instantiation of the parameters is done from the properties passed to the component.

Mapping complex objects

The conversion from property to object uses the Dot notation.

For example, assuming the method parameter was configured with @Option("file"):

file.path = /home/user/input.csv

file.format = CSV

These properties map to:

public class FileOptions {

@Option("path")

private File path;

@Option("format")

private Format format;

}

List case

Lists rely on an indexed syntax to define their elements.

For example, assuming that the list parameter is named files and that the elements are of the FileOptions type, you can define a list of two elements as follows:

files[0].path = /home/user/input1.csv

files[0].format = CSV

files[1].path = /home/user/input2.xml

files[1].format = EXCEL

Map case

Similarly to the list case, the map uses .key[index] and .value[index] to represent its keys and values:

// Map<String, FileOptions>

files.key[0] = first-file

files.value[0].path = /home/user/input1.csv

files.value[0].format = CSV

files.key[1] = second-file

files.value[1].path = /home/user/input2.xml

files.value[1].format = EXCEL

// Map<FileOptions, String>

files.key[0].path = /home/user/input1.csv

files.key[0].format = CSV

files.value[0] = first-file

files.key[1].path = /home/user/input2.xml

files.key[1].format = EXCEL

files.value[1] = second-file

Avoid using the Map type. Instead, prefer configuring your component with an object if this is possible.
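The indexed list syntax can be illustrated with a small binder that turns flat properties into objects. This is a sketch under stated assumptions, not the framework's conversion logic: FileOptionsEntry is a hypothetical stand-in for FileOptions with String fields, the list is assumed to be named files as in the list example, and gaps in the indices are not preserved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical target type mirroring FileOptions; format is kept as a String here.
class FileOptionsEntry {
    String path;
    String format;
}

// Sketch of the indexed list syntax only, not the framework's binder.
final class ListOptionBinder {
    // Matches keys such as "files[0].path": group 1 is the index, group 2 the field name.
    private static final Pattern ITEM = Pattern.compile("files\\[(\\d+)\\]\\.(\\w+)");

    static List<FileOptionsEntry> bind(final Map<String, String> properties) {
        // TreeMap keeps elements ordered by index, whatever the property iteration order.
        final SortedMap<Integer, FileOptionsEntry> byIndex = new TreeMap<>();
        for (Map.Entry<String, String> property : properties.entrySet()) {
            final Matcher m = ITEM.matcher(property.getKey());
            if (!m.matches()) {
                continue; // not an element of the "files" list
            }
            final FileOptionsEntry entry =
                byIndex.computeIfAbsent(Integer.parseInt(m.group(1)), i -> new FileOptionsEntry());
            switch (m.group(2)) {
                case "path":
                    entry.path = property.getValue();
                    break;
                case "format":
                    entry.format = property.getValue();
                    break;
                default:
                    break; // unknown fields are ignored in this sketch
            }
        }
        // Gaps in the indices (for example 0 and 2) are compacted here.
        return new ArrayList<>(byIndex.values());
    }
}
```

Feeding it the four files[...] properties from the list example yields two entries, the second one having the path /home/user/input2.xml and the format EXCEL.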

Defining Constraints and validations on the configuration

You can use metadata to specify that a field is required or has a minimum size, and so on. This is done using the validation metadata in the org.talend.sdk.component.api.configuration.constraint package:

MaxLength

Ensure the decorated option size is validated with an upper bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Max
- Name: maxLength
- Parameter Type: double
- Supported Types: java.lang.CharSequence
- Sample:

{
"validation::maxLength":"12.34"
}

MinLength

Ensure the decorated option size is validated with a lower bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Min
- Name: minLength
- Parameter Type: double
- Supported Types: java.lang.CharSequence
- Sample:

{
"validation::minLength":"12.34"
}

Pattern

Validate the decorated string against a JavaScript regular expression pattern (the validation also applies in the Studio).

- API: @org.talend.sdk.component.api.configuration.constraint.Pattern
- Name: pattern
- Parameter Type: java.lang.String
- Supported Types: java.lang.CharSequence
- Sample:

{
"validation::pattern":"test"
}

Max

Ensure the decorated option value is validated with an upper bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Max
- Name: max
- Parameter Type: double
- Supported Types: java.lang.Number, int, short, byte, long, double, float
- Sample:

{
"validation::max":"12.34"
}

Min

Ensure the decorated option value is validated with a lower bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Min
- Name: min
- Parameter Type: double
- Supported Types: java.lang.Number, int, short, byte, long, double, float
- Sample:

{
"validation::min":"12.34"
}

Required

Mark the field as being mandatory.

- API: @org.talend.sdk.component.api.configuration.constraint.Required
- Name: required
- Parameter Type: none
- Supported Types: java.lang.Object
- Sample:

{
"validation::required":"true"
}

MaxItems

Ensure the decorated collection size is validated with an upper bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Max
- Name: maxItems
- Parameter Type: double
- Supported Types: java.util.Collection
- Sample:

{
"validation::maxItems":"12.34"
}

MinItems

Ensure the decorated collection size is validated with a lower bound.

- API: @org.talend.sdk.component.api.configuration.constraint.Min
- Name: minItems
- Parameter Type: double
- Supported Types: java.util.Collection
- Sample:

{
"validation::minItems":"12.34"
}

UniqueItems

Ensure the elements of the collection are distinct (set semantics).

- API: @org.talend.sdk.component.api.configuration.constraint.Uniques
- Name: uniqueItems
- Parameter Type: none
- Supported Types: java.util.Collection
- Sample:

{
"validation::uniqueItems":"true"
}

When using the programmatic API, metadata is prefixed with tcomp::. This prefix is stripped in the web for convenience, and the samples above use the web keys.

Also note that these validations are executed before the runtime is started (when loading the component instance) and that the execution will fail if they don’t pass.

If it breaks your application, you can disable that validation on the JVM by setting the system property talend.component.configuration.validation.skip to true.
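To make the constraint semantics concrete, the following hand-written check mimics what @Required, a maxLength bound and @Min/@Max express for a hypothetical configuration with a host and a port. It is only an illustration, not the framework's validator: the bounds (255 characters, ports 1 to 65535) are arbitrary assumptions, an empty host is treated as missing in this sketch, and the skip switch reads the talend.component.configuration.validation.skip system property through Boolean.getBoolean.

```java
import java.util.ArrayList;
import java.util.List;

// Hand-written checks on a hypothetical host/port configuration; not Talend API.
final class ConfigValidationSketch {
    static final String SKIP_PROPERTY = "talend.component.configuration.validation.skip";

    static List<String> validate(final String host, final int port) {
        final List<String> errors = new ArrayList<>();
        if (Boolean.getBoolean(SKIP_PROPERTY)) {
            return errors; // same opt-out as the documented system property
        }
        // Like @Required (null or empty treated as missing in this sketch).
        if (host == null || host.isEmpty()) {
            errors.add("host is required");
        } else if (host.length() > 255) { // like a maxLength bound
            errors.add("host is longer than maxLength=255");
        }
        // Like @Min(1) and @Max(65535) on a numeric option.
        if (port < 1 || port > 65535) {
            errors.add("port must be between min=1 and max=65535");
        }
        return errors;
    }
}
```

A loader could refuse to start the component whenever the returned list is not empty, which corresponds to the failure behavior described above.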

Marking a configuration as dataset or datastore

It is common to classify the incoming data. It is similar to tagging data with several types. Data can commonly be categorized as follows:

- Datastore: The data you need to connect to the backend.
- Dataset: A datastore coupled with the data you need to execute an action.

Dataset

Mark a model (complex object) as being a dataset.

- API: @org.talend.sdk.component.api.configuration.type.DataSet
- Sample:

{
"tcomp::configurationtype::name":"test",
"tcomp::configurationtype::type":"dataset"
}

Datastore

Mark a model (complex object) as being a datastore (connection to a backend).

- API: @org.talend.sdk.component.api.configuration.type.DataStore
- Sample:

{
"tcomp::configurationtype::name":"test",
"tcomp::configurationtype::type":"datastore"
}

The component family associated with a configuration type (datastore/dataset) is always the one related to the component using that configuration.

Those configuration types can be composed to provide one configuration item. For example, a dataset type often needs a datastore type to be provided. A datastore type (that provides the connection information) is used to create a dataset type.

Those configuration types are also used at design time to create shared configurations that can be stored and used at runtime.

For example, in the case of a relational database that supports JDBC:

- A datastore can be made of:
  - a JDBC URL
  - a username
  - a password.
- A dataset can be made of:
  - a datastore (that provides the data required to connect to the database)
  - a table name
  - data.
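The JDBC example can be expressed as two plain model classes, where the dataset holds a reference to the datastore. In this sketch, JdbcDatastore and JdbcDataset are hypothetical names, and the @DataStore, @DataSet and @Option annotations that a real component would use are omitted so that the code compiles without the framework.

```java
import java.io.Serializable;

// Hypothetical connection model (the datastore role).
class JdbcDatastore implements Serializable {
    final String jdbcUrl;
    final String username;
    final String password;

    JdbcDatastore(final String jdbcUrl, final String username, final String password) {
        this.jdbcUrl = jdbcUrl;
        this.username = username;
        this.password = password;
    }
}

// Hypothetical dataset model: it reuses the datastore instead of duplicating connection fields.
class JdbcDataset implements Serializable {
    final JdbcDatastore datastore;
    final String tableName;

    JdbcDataset(final JdbcDatastore datastore, final String tableName) {
        this.datastore = datastore;
        this.tableName = tableName;
    }
}
```

Several datasets (for example one per table) can share the same datastore instance, which is the composition described above.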

The component server scans all configuration types and returns a configuration type index. This index can be used for the integration into the targeted platforms (Studio, web applications, and so on).

The configuration type index is represented as a flat tree that contains all the configuration types, which themselves are represented as nodes and indexed by ID.

Every node can point to other nodes. This relation is represented as an array of edges that provides the child IDs.

As an illustration, a configuration type index for the example above can be defined as follows:

{
"nodes": {
"idForDstore": { "datastore": "datastore data", "edges": [{ "id": "idForDset" }] },
"idForDset": { "dataset": "dataset data" }
}
}

The model allows you to define meta information without restrictions. However, it is highly recommended to ensure that:


Defining links between properties

If you need to define a binding between properties, you can use a set of annotations:

ActiveIf

If the evaluation of the element at the target location matches one of the given values, the element is considered active; otherwise, it is deactivated.

- API: @org.talend.sdk.component.api.configuration.condition.ActiveIf
- Type: if
- Sample:

{
"condition::if::evaluationStrategy":"DEFAULT",
"condition::if::negate":"false",
"condition::if::target":"test",
"condition::if::value":"value1,value2"
}

ActiveIfs

Allows you to set multiple visibility conditions on the same property.

- API: @org.talend.sdk.component.api.configuration.condition.ActiveIfs
- Type: ifs
- Sample:

{
"condition::if::evaluationStrategy::0":"DEFAULT",
"condition::if::evaluationStrategy::1":"LENGTH",
"condition::if::negate::0":"false",
"condition::if::negate::1":"true",
"condition::if::target::0":"sibling1",
"condition::if::target::1":"../../other",
"condition::if::value::0":"value1,value2",
"condition::if::value::1":"SELECTED",
"condition::ifs::operator":"AND"
}

The target element location is specified as a relative path to the current location, using Unix path characters.

The configuration class delimiter is /.

The parent configuration class is specified by "..".

Thus, ../targetProperty denotes a property, which is located in the parent configuration class and is named targetProperty.
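The relative target syntax can be illustrated with a small resolver. This is a hypothetical sketch, not the framework's implementation: it assumes that option paths use "." between levels (for example config.sub.prop) and that resolution starts from the configuration class owning the option. It only models the DEFAULT evaluation strategy with negate; the LENGTH strategy and the AND/OR operator of ActiveIfs are not covered.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;
import java.util.Map;

// Hypothetical helpers: option paths use "." between levels, condition targets use "/".
final class ActiveIfSketch {
    // Resolves "sibling1" or "../targetProperty" against the path of the option carrying the condition.
    static String resolve(final String optionPath, final String target) {
        final Deque<String> segments = new ArrayDeque<>();
        final String[] parts = optionPath.split("\\.");
        // Start from the configuration class that owns the option (drop the option name).
        for (int i = 0; i < parts.length - 1; i++) {
            segments.addLast(parts[i]);
        }
        for (String step : target.split("/")) {
            if (step.isEmpty() || step.equals(".")) {
                continue;
            }
            if (step.equals("..")) {
                if (segments.isEmpty()) {
                    throw new IllegalArgumentException("target goes above the root: " + target);
                }
                segments.removeLast(); // move up to the parent configuration class
            } else {
                segments.addLast(step);
            }
        }
        return String.join(".", segments);
    }

    // DEFAULT strategy only: active when the target value is one of the comma-separated
    // accepted values, inverted when negate is true.
    static boolean isActive(final Map<String, String> values, final String optionPath,
                            final String target, final String acceptedValues, final boolean negate) {
        final String actual = values.get(resolve(optionPath, target));
        final boolean matches = actual != null && Arrays.asList(acceptedValues.split(",")).contains(actual);
        return matches != negate;
    }
}
```

Under these assumptions, ../targetProperty evaluated from config.sub.prop resolves to config.targetProperty, and ../../other evaluated from config.sub.deep.prop resolves to config.other.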

When using the programmatic API, metadata is prefixed with tcomp::. This prefix is stripped in the web for convenience, and the samples above use the web keys.

Adding hints about the rendering

In some cases, you may need to add metadata about the configuration to let the UI render that configuration properly.

For example, a password value must be hidden rather than displayed in a simple clear-text input box. To change the UI rendering in such cases, you can use the following set of annotations:

@DefaultValue

Provide a default value that the UI can use (primitive fields only).

- API: @org.talend.sdk.component.api.configuration.ui.DefaultValue
- Sample:

{
"ui::defaultvalue::value":"test"
}

@OptionsOrder

Allows you to sort the properties of a class.

- API: @org.talend.sdk.component.api.configuration.ui.OptionsOrder
- Sample:

{
"ui::optionsorder::value":"value1,value2"
}

@AutoLayout

Request the renderer to do what it thinks is best.

- API: @org.talend.sdk.component.api.configuration.ui.layout.AutoLayout
- Sample:

{
"ui::autolayout":"true"
}

@GridLayout

Advanced layout that places properties by row. It is mutually exclusive with @OptionsOrder.

- API: @org.talend.sdk.component.api.configuration.ui.layout.GridLayout
- Sample:

{
"ui::gridlayout::value1::value":"first|second,third",
"ui::gridlayout::value2::value":"first|second,third"
}

@GridLayouts

Allows you to configure multiple grid layouts on the same class, each qualified with a classifier (name).

- API: @org.talend.sdk.component.api.configuration.ui.layout.GridLayouts
- Sample:

{
"ui::gridlayout::Advanced::value":"another",
"ui::gridlayout::Main::value":"first|second,third"
}

@HorizontalLayout

When put on a configuration class, it notifies the UI that a horizontal layout is preferred.

- API: @org.talend.sdk.component.api.configuration.ui.layout.HorizontalLayout
- Sample:

{
"ui::horizontallayout":"true"
}

@VerticalLayout

When put on a configuration class, it notifies the UI that a vertical layout is preferred.

- API: @org.talend.sdk.component.api.configuration.ui.layout.VerticalLayout
- Sample:

{
"ui::verticallayout":"true"
}

@Code

Mark a field as being represented by a code widget (rather than a text area, for instance).

- API: @org.talend.sdk.component.api.configuration.ui.widget.Code
- Sample:

{
"ui::code::value":"test"
}

@Credential

Mark a field as being a credential. It is typically used to hide the value in the UI.

- API: @org.talend.sdk.component.api.configuration.ui.widget.Credential
- Sample:

{
"ui::credential":"true"
}

@Structure

Mark a List<String> or Map<String, String> field as being represented as the component data selector (generally field names, or field names as keys and types as values).

- API: @org.talend.sdk.component.api.configuration.ui.widget.Structure
- Sample:

{
"ui::structure::discoverSchema":"test",
"ui::structure::type":"IN",
"ui::structure::value":"test"
}

@TextArea

Mark a field as being represented by a text area (multiline text input).

- API: @org.talend.sdk.component.api.configuration.ui.widget.TextArea
- Sample:

{
"ui::textarea":"true"
}

When using the programmatic API, metadata is prefixed with tcomp::. This prefix is stripped in the web for convenience, and the samples above use the web keys.

You can also check this example about masking credentials.

Target support should cover org.talend.core.model.process.EParameterFieldType but you need to ensure that the web renderer is able to handle the same widgets.
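The @GridLayout sample value "first|second,third" can be read as a list of rows. The sketch below assumes that "|" separates rows and "," separates the options placed on the same row; GridLayoutSketch is a hypothetical helper, not a framework class.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Assumption: "|" separates rows and "," separates the options placed side by side on a row.
final class GridLayoutSketch {
    static List<List<String>> rows(final String layoutValue) {
        final List<List<String>> rows = new ArrayList<>();
        for (String row : layoutValue.split("\\|")) {
            rows.add(Arrays.asList(row.split(",")));
        }
        return rows;
    }

    public static void main(final String[] args) {
        // Prints [[first], [second, third]]
        System.out.println(rows("first|second,third"));
    }
}
```

Read this way, the first option sits alone on the first row, while second and third share the second row.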

Internationalizing components

In most cases, you can internationalize your components by storing messages in a properties file in your component module.

This properties file must be stored in the same package as the related components and named Messages. For example, org.talend.demo.MyComponent uses org.talend.demo.Messages[locale].properties.

This file already exists when you import a project generated from the starter.

Default components keys

Out of the box components are internationalized using the same location logic for the resource bundle. The supported keys are:

| Name Pattern | Description |
|---|---|
| ${family}._displayName | Display name of the family |
| ${family}.${category}._category | Display name of the category |
| ${family}.${configurationType}.${name}._displayName | Display name of a configuration type (dataStore or dataSet). Important: this key is read from the family package (not the class package), to unify the localization of the metadata. |
| ${family}.actions.${actionType}.${actionName}._displayName | Display name of an action of the family. Specifying it is optional; it defaults to the action name if not set. |
| ${family}.${component_name}._displayName | Display name of the component (used by the GUIs) |
| ${property_path}._displayName | Display name of the option. |
| ${simple_class_name}.${property_name}._displayName | Display name of the option using its class name. |
| ${enum_simple_class_name}.${enum_name}._displayName | Display name of the ${enum_name} value of the ${enum_simple_class_name} enumeration. |
| ${property_path}._placeholder | Placeholder of the option. |

Example of configuration for a component named list and belonging to the memory family (@Emitter(family = "memory", name = "list")):

memory.list._displayName = Memory List

Internationalizing a configuration class

Configuration classes can be translated using the simple class name in the messages properties file. This is useful in case of common configurations shared by multiple components.

For example, if you have a configuration class as follows:

public class MyConfig {

@Option

private String host;

@Option

private int port;

}

You can give it a translatable display name by adding ${simple_class_name}.${property_name}._displayName keys to Messages.properties under the same package as the configuration class.

MyConfig.host._displayName = Server Host Name

MyConfig.host._placeholder = Enter Server Host Name...

MyConfig.port._displayName = Server Port

MyConfig.port._placeholder = Enter Server Port...

If you have a display name using the property path, it overrides the display name defined using the simple class name. This rule also applies to placeholders.
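The override rule can be sketched as a lookup over java.util.Properties. DisplayNameLookup, the configuration.host property path used in the usage note and the fallback to the raw property name are assumptions for illustration; the framework resolves these keys through its own resource bundle handling.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical lookup: a key built from the property path wins over a key built
// from the simple class name.
final class DisplayNameLookup {
    static Properties load(final String content) {
        final Properties messages = new Properties();
        try {
            messages.load(new StringReader(content));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return messages;
    }

    static String displayName(final Properties messages, final String propertyPath,
                              final String simpleClassName, final String propertyName) {
        final String byPath = messages.getProperty(propertyPath + "._displayName");
        if (byPath != null) {
            return byPath;
        }
        final String byClass = messages.getProperty(simpleClassName + "." + propertyName + "._displayName");
        // Falling back to the raw property name is an assumption of this sketch.
        return byClass != null ? byClass : propertyName;
    }
}
```

With both MyConfig.host._displayName = Server Host Name and configuration.host._displayName = Host defined, the option at path configuration.host is displayed as Host, while the same option reached through any other path falls back to Server Host Name.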

Managing component versions and migration

If some changes impact the configuration, they can be managed through a migration handler at the component level (enabling trans-model migration support).

The @Version annotation supports a migrationHandler method which migrates the incoming configuration to the current model.

For example, if the filepath configuration entry from v1 changed to location in v2, you can remap the value in your MigrationHandler implementation.

A best practice is to split migrations into services that you can inject in the migration handler (through constructor) rather than managing all migrations directly in the handler. For example:

// full component code structure skipped for brevity, kept only migration part

@Version(value = 3, migrationHandler = MyComponent.Migrations.class)

public class MyComponent {

// the component code...

private interface VersionConfigurationHandler {

Map<String, String> migrate(Map<String, String> incomingData);

}

public static class Migrations implements MigrationHandler {

private final List<VersionConfigurationHandler> handlers;

// VersionConfigurationHandler implementations are decorated with @Service

public Migrations(final List<VersionConfigurationHandler> migrations) {

this.handlers = migrations;

this.handlers.sort(/*some custom logic*/);

}

@Override

public Map<String, String> migrate(final int incomingVersion, final Map<String, String> incomingData) {

Map<String, String> out = incomingData;

for (VersionConfigurationHandler handler : handlers) {

out = handler.migrate(out);

}

return out;

}

}

}

What is important to notice in this snippet is that you can organize your migrations in the way that best fits your component.

If you need to apply migrations in a specific order, make sure that they are sorted.


Consider this API as a migration callback rather than a migration API. Adjust the migration code structure you need behind the MigrationHandler, based on your component requirements, using service injection.
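As an illustration of organizing migrations behind the callback, here is a framework-independent sketch of the filepath to location example. MigrationChain is hypothetical: it keys each step by the version it upgrades to and applies, in ascending order, every step newer than the incoming version, mirroring the migrate(int incomingVersion, Map<String, String> incomingData) signature used in the snippet above.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;
import java.util.function.UnaryOperator;

// Hypothetical migration organizer; not part of the framework.
final class MigrationChain {
    // Steps keyed by the version they migrate to, kept sorted by the TreeMap.
    private final NavigableMap<Integer, UnaryOperator<Map<String, String>>> steps = new TreeMap<>();

    MigrationChain register(final int targetVersion, final UnaryOperator<Map<String, String>> step) {
        steps.put(targetVersion, step);
        return this;
    }

    // Applies, in ascending order, every step whose target version is newer than incomingVersion.
    Map<String, String> migrate(final int incomingVersion, final Map<String, String> incomingData) {
        Map<String, String> out = new HashMap<>(incomingData);
        for (UnaryOperator<Map<String, String>> step : steps.tailMap(incomingVersion, false).values()) {
            out = step.apply(out);
        }
        return out;
    }

    // Version 1 to 2 example from the text: the "filepath" entry was renamed to "location".
    static Map<String, String> renameFilepathToLocation(final Map<String, String> in) {
        final Map<String, String> out = new HashMap<>(in);
        final String value = out.remove("filepath");
        if (value != null) {
            out.put("location", value);
        }
        return out;
    }
}
```

A configuration saved with version 1 gets its filepath entry renamed to location, while a configuration already at version 2 is returned unchanged. Keeping the steps sorted by version is one way to satisfy the ordering requirement mentioned above.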

|

Implementing batch processing

Depending on several requirements, including the system capacity and business needs, a processor can process records differently.

For example, for real-time or near-real time processing, it is more interesting to process small batches of data more often. On the other hand, in case of one-time processing, it is more optimal to adapt the way the component handles batches of data according to the system capacity.

By default, the runtime automatically estimates a group size that it considers good, according to the system capacity, to process the data. This group size can sometimes be too big and not optimal for your needs or for your system to handle effectively and correctly.

Users can then customize this size from the component settings in Talend Studio, by specifying a maxBatchSize that adapts the size of each group of data to be processed.

| The estimated group size logic is automatically implemented when a component is deployed to a Talend application. Besides defining the @BeforeGroup and @AfterGroup logic detailed below, no action is required on the implementation side of the component. |

The component batch processes the data as follows:

-

Case 1 - No

maxBatchSizeis specified in the component configuration. The runtime estimates a group size of 4. Records are processed by groups of 4. -

Case 2 - The runtime estimates a group size of 4 but a

maxBatchSizeof 3 is specified in the component configuration. The system adapts the group size to 3. Records are processed by groups of 3.

Each group is processed as follows until there are no records left:

- The @BeforeGroup method resets a record buffer at the beginning of each group.
- The records of the group are assessed one by one and placed in the buffer: the @ElementListener method tests whether the buffer size is greater than or equal to the defined maxBatchSize. If it is, the buffered records are processed. If not, the current record is buffered.
- The previous step is repeated for all records of the group. Then the @AfterGroup method tests whether the buffer is empty.
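The following plain-Java sketch simulates this per-group flow without the framework. BufferedGroupWriter and its methods are hypothetical stand-ins for the annotated methods. The Consumer passed to the constructor stands in for the bulk write. The sketch makes two assumptions: after a full buffer is flushed, the current record is added to the now-empty buffer, and @AfterGroup writes any records that remain.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical plain-Java stand-in for a processor using @BeforeGroup, @ElementListener and @AfterGroup.
final class BufferedGroupWriter<T> {
    private final int maxBatchSize;
    private final Consumer<List<T>> bulkWriter; // hypothetical sink that stores a whole batch at once
    private List<T> buffer = new ArrayList<>();

    BufferedGroupWriter(int maxBatchSize, Consumer<List<T>> bulkWriter) {
        if (maxBatchSize <= 0) {
            throw new IllegalArgumentException("maxBatchSize must be positive");
        }
        this.maxBatchSize = maxBatchSize;
        this.bulkWriter = bulkWriter;
    }

    // Plays the role of @BeforeGroup: start each group with an empty buffer.
    void beforeGroup() {
        buffer = new ArrayList<>();
    }

    // Plays the role of @ElementListener: flush a full buffer, then buffer the current element.
    void onElement(T element) {
        if (buffer.size() >= maxBatchSize) {
            flush();
        }
        buffer.add(element);
    }

    // Plays the role of @AfterGroup: write the remaining elements if the buffer is not empty.
    void afterGroup() {
        if (!buffer.isEmpty()) {
            flush();
        }
    }

    private void flush() {
        bulkWriter.accept(List.copyOf(buffer)); // hand over an immutable snapshot
        buffer.clear();
    }
}

final class BufferedGroupDemo {
    public static void main(String[] args) {
        List<List<Integer>> batches = new ArrayList<>();
        BufferedGroupWriter<Integer> writer = new BufferedGroupWriter<>(3, batches::add);
        writer.beforeGroup();
        for (int i = 1; i <= 7; i++) {
            writer.onElement(i);
        }
        writer.afterGroup();
        System.out.println(batches); // [[1, 2, 3], [4, 5, 6], [7]]
    }
}
```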

You can implement this logic in the processor as follows:

import java.io.Serializable;
import java.util.ArrayList;
import java.util.Collection;

import javax.json.JsonObject;

import org.talend.sdk.component.api.processor.AfterGroup;
import org.talend.sdk.component.api.processor.BeforeGroup;
import org.talend.sdk.component.api.processor.ElementListener;
import org.talend.sdk.component.api.processor.Processor;

@Processor(name = "BulkOutputDemo")
public class BulkProcessor implements Serializable {

    // Records collected for the current group
    private Collection<JsonObject> buffer;

    // Called at the beginning of each group: start with an empty buffer
    @BeforeGroup
    public void begin() {
        buffer = new ArrayList<>();
    }

    // Called for each incoming record: add it to the buffer
    @ElementListener
    public void bufferize(final JsonObject object) {
        buffer.add(object);
    }

    // Called at the end of each group: write the buffered records
    @AfterGroup
    public void commit() {
        // save buffer records at once (bulk)
    }
}

You can learn more about processors in this document.

Building components with Maven

To develop new components, Talend Component Kit requires a build tool in which you will import the component project generated from the starter.

You will then be able to install and deploy it to Talend applications. A Talend Component Kit plugin is available for each of the supported build tools.

talend-component-maven-plugin helps you write components that follow best practices, and transparently generates the metadata used by Talend Studio.

You can use it as follows:

<plugin>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${component.version}</version>
</plugin>

This plugin is also an extension, so you can declare it in your build/extensions block as follows:

<extension>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${component.version}</version>
</extension>

When the plugin is declared as an extension, the goals detailed below are set up.

You can run the following command from the root of the project, replacing the goal name, parameters, and values as needed:

$ mvn talend-component:<name_of_the_goal>[:<execution id>] -D<param_user_property>=<param_value>

Dependencies

The first goal is a shortcut for the maven-dependency-plugin. It creates the TALEND-INF/dependencies.txt file, which lists the compile and runtime dependencies so that the component can use them at runtime:

<plugin>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${component.version}</version>
  <executions>
    <execution>
      <id>talend-dependencies</id>
      <goals>
        <goal>dependencies</goal>
      </goals>
    </execution>
  </executions>
</plugin>

Validating the component programming model

This goal helps you validate the common programming model of the component. To activate it, you can use the following execution definition:

<plugin>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${component.version}</version>
  <executions>
    <execution>
      <id>talend-component-validate</id>
      <goals>
        <goal>validate</goal>
      </goals>
    </execution>
  </executions>
</plugin>

This goal is bound to the process-classes phase by default. When executed, it performs several validations. You can disable each of them by setting the corresponding flag to false in the <configuration> block of the execution:

| Name | Description | User property | Default |
|---|---|---|---|
| validateInternationalization | Validates that resource bundles are present and contain commonly used keys (for example, | | true |
| validateModel | Ensures that components pass validations of the | | true |
| validateSerializable | Ensures that components are | | true |
| validateMetadata | Ensures that components have an | | true |
| validateDataStore | Ensures that any | | true |
| validateDataSet | Ensures that any | | true |
| validateComponent | Ensures that the native programming model is respected. You can disable it when using another programming model like Beam. | | true |
| validateActions | Validates action signatures for actions not tolerating dynamic binding ( | | true |
| validateFamily | Validates the family by verifying that the package containing the | | true |
| validateDocumentation | Ensures that all components and | | true |
| validateLayout | Ensures that the layout references existing options and properties. | | true |
| validateOptionNames | Ensures that the option names are compliant with the framework. It is highly recommended and safer to keep it set to | | true |
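The validateSerializable flag suggests a check that components can be serialized, which is one reason why a processor such as BulkProcessor implements Serializable. The plain-Java sketch below is not the plugin's implementation. It shows one way such a problem surfaces: writing an instance with ObjectOutputStream fails when a field holds a non-serializable value. GoodProcessor, BadProcessor, and PlainProcessor are hypothetical classes used only to exercise the check.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Illustrative check only; not how the Maven plugin performs its validation.
final class SerializabilityCheck {
    private SerializabilityCheck() {}

    static boolean canSerialize(Object component) {
        // A class that does not implement Serializable is rejected straight away.
        if (!(component instanceof Serializable)) {
            return false;
        }
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            // Walks the whole object graph and fails if any field value is not serializable.
            out.writeObject(component);
            return true;
        } catch (IOException e) { // NotSerializableException is an IOException
            return false;
        }
    }
}

// Hypothetical components used to exercise the check.
class GoodProcessor implements Serializable {
    private final String table = "orders";
}

class BadProcessor implements Serializable {
    private final Object lock = new Object(); // java.lang.Object is not Serializable
}

class PlainProcessor {
}

final class SerializabilityDemo {
    public static void main(String[] args) {
        System.out.println(SerializabilityCheck.canSerialize(new GoodProcessor()));  // true
        System.out.println(SerializabilityCheck.canSerialize(new BadProcessor()));   // false
        System.out.println(SerializabilityCheck.canSerialize(new PlainProcessor())); // false
    }
}
```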

Generating the component documentation

The asciidoc goal generates an Asciidoc file documenting your component from the configuration model (@Option) and the @Documentation property that you can add to options and to the component itself.

<plugin>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${component.version}</version>
  <executions>
    <execution>
      <id>talend-component-documentation</id>
      <goals>
        <goal>asciidoc</goal>
      </goals>
    </execution>
  </executions>
</plugin>

You can configure the goal with the following parameters:

| Name | Description | User property | Default |
|---|---|---|---|
| level | Level of the root title. | | 2 ( |
| output | Output folder path. It is recommended to keep the default value. | | |
| formats | Map of the renderings to do. Keys are the format ( | | - |
| attributes | Asciidoctor attributes to use for the rendering when formats is set. | | - |
| templateEngine | Template engine configuration for the rendering. | | - |
| templateDir | Template directory for the rendering. | | - |
| title | Document title. | | ${project.name} |
| version | The component version. Defaults to the POM version. | | ${project.version} |
| workDir | The template directory for the Asciidoctor rendering, used if formats is set. | | ${project.build.directory}/talend-component/workdir |
| attachDocumentations | Attaches (and deploys) the documentations ( | | true |
| htmlAndPdf | If you use the plugin as an extension, you can add this property and set it to | | false |
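To make the idea of generating documentation from the configuration model more concrete, here is a plain-Java sketch that reads field annotations through reflection and emits an Asciidoc section with a table. DemoOption and DemoDocumentation are hypothetical stand-ins for @Option and @Documentation. The output layout is an assumption and does not reproduce what the asciidoc goal actually generates.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;

// Hypothetical stand-ins for the framework's @Option and @Documentation annotations.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface DemoOption {}

@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.FIELD, ElementType.TYPE})
@interface DemoDocumentation {
    String value();
}

// Hypothetical configuration model.
@DemoDocumentation("Writes records to a database table.")
class DemoConfiguration {
    @DemoOption
    @DemoDocumentation("Target table name.")
    private String table;

    @DemoOption
    @DemoDocumentation("Number of records written per bulk request.")
    private int batchSize;

    private String internalState; // not an option, so it is not documented
}

final class AsciidocSketch {
    private AsciidocSketch() {}

    // Builds a section title, the class description, and a table with one row per option.
    static String render(Class<?> type) {
        StringBuilder doc = new StringBuilder("== ").append(type.getSimpleName()).append("\n\n");
        DemoDocumentation typeDoc = type.getAnnotation(DemoDocumentation.class);
        if (typeDoc != null) {
            doc.append(typeDoc.value()).append("\n\n");
        }
        doc.append("|===\n|Option |Description\n\n");
        for (Field field : type.getDeclaredFields()) {
            if (!field.isAnnotationPresent(DemoOption.class)) {
                continue; // only fields marked as options are documented
            }
            DemoDocumentation fieldDoc = field.getAnnotation(DemoDocumentation.class);
            doc.append('|').append(field.getName())
               .append(" |").append(fieldDoc == null ? "-" : fieldDoc.value()).append('\n');
        }
        return doc.append("|===\n").toString();
    }

    public static void main(String[] args) {
        System.out.print(render(DemoConfiguration.class));
    }
}
```

The order of the table rows follows getDeclaredFields, which the Java specification does not guarantee to match the declaration order.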

Rendering your documentation

To render the generated documentation in HTML or PDF, you can use the Asciidoctor Maven plugin (or its Gradle equivalent). If you want both HTML and PDF renderings, you can configure both executions.

Make sure to execute the rendering after the documentation generation.

HTML rendering

If you prefer an HTML rendering, you can configure the following execution in the Asciidoctor plugin. The example below:

- Generates the component documentation in target/classes/TALEND-INF/documentation.adoc.
- Renders the documentation as an HTML file stored in target/documentation/documentation.html.

<!-- Generates target/classes/TALEND-INF/documentation.adoc -->
<plugin>
  <groupId>org.talend.sdk.component</groupId>
  <artifactId>talend-component-maven-plugin</artifactId>
  <version>${talend-component-kit.version}</version>
  <executions>
    <execution>
      <id>documentation</id>
      <phase>prepare-package</phase>
      <goals>
        <goal>asciidoc</goal>
      </goals>
    </execution>
  </executions>
</plugin>

<!-- Renders the generated file as target/documentation/documentation.html -->
<plugin>
  <groupId>org.asciidoctor</groupId>
  <artifactId>asciidoctor-maven-plugin</artifactId>
  <version>1.5.6</version>
  <executions>
    <execution>
      <id>doc-html</id>
      <phase>prepare-package</phase>
      <goals>
        <goal>process-asciidoc</goal>
      </goals>
      <configuration>
        <sourceDirectory>${project.build.outputDirectory}/TALEND-INF</sourceDirectory>
        <sourceDocumentName>documentation.adoc</sourceDocumentName>
        <outputDirectory>${project.build.directory}/documentation</outputDirectory>
        <backend>html5</backend>
      </configuration>
    </execution>
  </executions>
</plugin>

PDF rendering

If you prefer a PDF rendering, you can configure the following execution in the Asciidoctor plugin:

<plugin>
  <groupId>org.asciidoctor</groupId>
  <artifactId>asciidoctor-maven-plugin</artifactId>
  <version>1.5.6</version>
  <executions>
    <execution>
      <id>doc-pdf</id>
      <phase>prepare-package</phase>
      <goals>
        <goal>process-asciidoc</goal>
      </goals>
      <configuration>
        <sourceDirectory>${project.build.outputDirectory}/TALEND-INF</sourceDirectory>
        <sourceDocumentName>documentation.adoc</sourceDocumentName>
        <outputDirectory>${project.build.directory}/documentation</outputDirectory>
        <backend>pdf</backend>
      </configuration>
    </execution>
  </executions>
  <dependencies>
    <dependency>
      <groupId>org.asciidoctor</groupId>
      <artifactId>asciidoctorj-pdf</artifactId>
      <version>1.5.0-alpha.16</version>
    </dependency>
  </dependencies>
</plugin>

Including the documentation into a document

If you want to add more content or a title, you can include the generated document in another document using the Asciidoc include directive.

For example:

= Super Components
Super Writer
:toc:
:toclevels: 3
:source-highlighter: prettify
:numbered:
:icons: font
:hide-uri-scheme:
:imagesdir: images

include::{generated_doc}/documentation.adoc[]

For this to work, you need to pass the generated_doc attribute to the Asciidoctor plugin. For example:

<plugin>
  <groupId>org.asciidoctor</groupId>
  <artifactId>asciidoctor-maven-plugin</artifactId>
  <version>1.5.6</version>
  <executions>
    <execution>
      <id>doc-html</id>
      <phase>prepare-package</phase>
      <goals>
        <goal>process-asciidoc</goal>
      </goals>
      <configuration>
        <sourceDirectory>${project.basedir}/src/main/asciidoc</sourceDirectory>
        <sourceDocumentName>my-main-doc.adoc</sourceDocumentName>
        <outputDirectory>${project.build.directory}/documentation</outputDirectory>
        <backend>html5</backend>
        <attributes>